Machine Learning

Contents

- Prerequisites

- Linear Regression & Cost Functions

- Perceptron Classification Algorithm

- Probabilistic Binary Classification with Logistic Regression

- K-Nearest Neighbors Classification

- Generalized Linear Models (GLMs)

- Generative Learning Algorithms: Gaussian Discriminant Analysis

- Naive Bayes & Laplace Smoothing

- Kernel Methods

- Support Vector Machines (SVMs)

- Deep Learning: Neural Networks & Backpropagation

- Decision Trees

- K-Means Clustering

- Gausian Mixture Model & EM Algorithm

- Factor & Principal Component Analysis

- Deep Learning: Convolutional & Graph Neural Networks

- Reinforcement Learning

Prerequisites

[Hide]

In general, a learning problem considers a set of $n$ samples of data (possibly multivariate) and then tries to predict properties of unknown data. More specifically, it is about learning some properties of a data set and testing those properties against another data set. A common practice in machine learning is to evaluate an algorithm by splitting a data set into two. We call one of those sets the training set, on which we learn some properties; we call the other set the testing set, on which we test the learned properties.

Notation

We begin by establishing some notation.

- The input variables are usually denoted with the letter $x$ (which lies in the input space $\mathcal{X}$. They are known as the independent variables or explanatory variables.

- The outputs are denoted with the letter $y$ (lying in the output space $\mathcal{Y}$). They ar knwn as the dependent variables or response variables.

Types of Machine Learning Algorithms

Learning problems fall into a few categories:

- In supervised learning, each example in the training set is a pair consisting of an input object and a desired output value (aka supervisory signal). A supervised learning algorithm analyzes the training data and produces an inferred function, which can be used to mapping new examples. This requires the learning algorithm to generalize from the training data to unseen situations in a "reasonable" way, ideally producing correct results. More mathematically, our goal is, given a training set, to learn the hypothesis function

\[h: \mathcal{X} \longrightarrow \mathcal{Y}\]

that is a good predictor for the corresponding value of $y$.

- Classification: Samples belong to two or more classes and we want to learn from already labeled data how to predict the class of unlabeled data. Examples include handwritten digit recognition, in which the aim is to assign each input vector to one of a finite number of discrete categories. Another way to think of classification is as a discrete (as opposed to continuous) form of supervised learning where one has a limited number of categories.

- Regression: If the desired output consists of one or more continuous variables, then the task is called regression. An example of a regression problem would be the prediction of the length of a salmon as a function of its age and weight.

- In unsupervised learning, the algorithm is not provided with pre-assigned labels or scores for the training data. As a result, unsupervised learning algorithms must first self-discover any naturally occuring patterns in that training set. Common examples include clustering, where the algorithm automatically groups its training examples into categories with similar features, and principal component analysis, where the algorithm finds ways to compress the training data set by identifying which features are most useful for discriminating between different training examples, and discarding the rest.

- In semi-supervised learning, the algorithm is provided with a set that combines a small amount of labeled data with a large amount of unlabeled data during training. More specifically, a set of $l$ samples $x^{(1)}, \ldots, x^{(l)} \in \mathcal{X}$ with following labels $y^{(1)}, \ldots, y^{(l)} \in \mathcal{Y}$ and $u$ unlabaled samples $x^{(l+1)}, \ldots, x^{(l+u)} \in \mathcal{X}$ are processed. The algorithm can work with this by either:

- discarding the unlabeled data and doing supervised learning, or by

- discarding the labels and doing unsupervised learning.

Linear Regression & Cost Functions

[Hide]

Let us assume that both $\mathcal{X}$ and $\mathcal{Y}$ are complete metric spaces (i.e. it is topologically complete). In most cases, this will be such that $\mathcal{X} = \mathbb{R}^d$ and $\mathcal{Y} = \mathbb{R}$. Our goal is to find an (affine) linear hypothesis function $h$ of the form

\[h_\theta (x) \equiv \theta_0 + \sum_{i=1}^d \theta_i x_i\]

where $\theta_0$ is the translation factor. For simplicity of notation, we rewrite the coefficients of linear $\theta$ and the input parameters $x$ as

\[\theta = \begin{pmatrix} \theta_0 \\ \theta_1 \\ \theta_2 \\ \vdots \\ \theta_d \end{pmatrix} \;\;\text{ and }\;\;\; x = \begin{pmatrix}1 \\ x_1 \\ x_2 \\ \vdots \\ x_d \end{pmatrix} \]

which gives us

\[h_\theta (x) \equiv \theta^T x\]

Before we go any further, we must actually answer a very fundamental question: How do we define what the best line is? It turns out that how we answer this question has a great effect on what the line is. We could answer this in multiple ways, with answer 2 being most familiar.

Assume that the tangent variables and the inputs are related via the equation (in this case $h_\theta (x) \equiv \theta^T x$): \[y^{(i)} = \theta^T x^{(i)} + \varepsilon^{(i)}, \;\;\; \text{ with } \varepsilon^{(i)} \sim \mathcal{N}(0, \sigma^2)\] where $\theta^T: \mathbb{R}^{d+1} \longrightarrow \mathbb{R}$ represents a linear map, and the $\varepsilon^{(i)}$ are error terms that capture either unmodeled effects or random noise. Let us further assume that the $\varepsilon^{(i)}$ are distributed iid and Gaussian. The density $p$ of $\varepsilon^{(i)}$ is given by \[p(\varepsilon^{(i)}) \equiv \frac{1}{\sigma \sqrt{2 \pi}} \exp\bigg( -\frac{ (\varepsilon^{(i)})^2}{2 \sigma^2}\bigg)\] These are reasonably justifiable assumptions since we are basically saying that the effects of the errors should be the same for each training sample (the choice of Gaussian is supported by CLT). Now, given that we have all the input samples given $x^{(i)} \in \mathbb{R}^{d+1}$ (remember that $x_0^{(i)} = 1$ trivially to account for the translation term $\theta_0$), this implies that $\theta^T x^{(i)} \in \mathbb{R}$ is already evaluated and given, and so the following distribution of $y^{(i)}$ given $x^{(i)}$ is just a shifted Gaussian. \[y^{(i)}\,|\,x^{(i)} \sim \mathcal{N}\big(\theta^T x^{(i)}, \sigma^2\big) \implies p \big(y^{(i)}\,|\,x^{(i)} \big) \equiv \frac{1}{\sigma \sqrt{2 \pi}} \exp \bigg( - \frac{\big(y^{(i)} - \theta^T x^{(i)}\big)^2}{2 \sigma^2}\bigg) \] for all $i = 1, 2, \ldots, n$. That is, $y^{(i)} \,|\, x^{(i)}$ are all Gaussian distributions centered at $\theta^T x^{(i)}$ and variance of $\sigma^2$. Since these are all iid, we can create a joint distribution of all $y^{(i)}$s, encoded into a single $y$, defined with the multivariate density $P$: \[P(y\,|\,X) \equiv P\Bigg( \begin{pmatrix} y^{(1)} \\ y^{(2)} \\ \vdots \\ y^{(n)} \end{pmatrix} \, \Bigg| \, \begin{pmatrix} - & (x^{(2)})^T & -\\- & (x^{(1)})^T & -\\\vdots & \vdots & \vdots \\- & (x^{(n)})^T & - \end{pmatrix} \Bigg) \equiv \prod_{i=1}^n \frac{1}{\sigma \sqrt{2\pi}} \exp \bigg( - \frac{\big(y^{(i)} - \theta^T x^{(i)}\big)^2}{2 \sigma^2}\bigg)\] That is, given a set of input vectors $x^{(1)}, x^{(2)}, \ldots, x^{(n)}$ all encoded in $n \times (d+1)$ matrix $X$, $P: \mathbb{R}^n \longrightarrow \mathbb{R}$ is a probability density function that outputs the probability of the sample outputs being $y^{(1)}, y^{(2)}, \ldots, y^{(n)}$, i.e. the probability of the output vector being $y$. The distribution is centered at vector \[\begin{pmatrix} \theta^T x^{(1)} \\ \theta^T x^{(2)} \\ \vdots \\ \theta^T x^{(n)} \end{pmatrix} = X \theta \in \mathbb{R}^n\] Let's get a bit more abstract. So far, we have treated $\theta$ and $X$ as the givens and treated the density as a function of $y$. If we treat $X$ and $y$ as givens and $\theta$ as the input, then we get a likelihood function $L$, defined (really, the same): \[L(\theta) \equiv \prod_{i=1}^n \frac{1}{\sigma \sqrt{2\pi}} \exp \bigg( - \frac{\big(y^{(i)} - \theta^T x^{(i)}\big)^2}{2 \sigma^2}\bigg)\] That is, $L$ takes in a value of $\theta$ that represents a certain linear best fit model $h$, and it tells us the probability of output $y$ happening given inputs $X$ in the form of a density value.

Now, given this probabilistic model relating the $y^{(i)}$s to the $x^{(i)}$s, it is logically obvious to choose $\theta$ such that $L(\theta)$ is as high as possible so that we would get the $\theta$ value that has the greatest probability of outputting $y$ given data $X$. But this is the same thing as maximizing the log likelihood $l(\theta)$ \begin{align*} l(\theta) & = \log L(\theta) \\ & = \log \prod_{i=1}^n \frac{1}{\sigma \sqrt{2\pi}} \exp \bigg( -\frac{(y^{(i)} - \theta^T x^{(i)})^2}{2\sigma^2} \bigg) \\ & = \sum_{i=1}^n \log \frac{1}{\sigma \sqrt{2 \pi}} \exp \bigg( - \frac{(y^{(i)} - \theta^T x^{(i)})^2}{2\sigma^2} \bigg) \\ & = - \frac{n}{\sigma^2} \, \log \bigg(\frac{1}{\sigma \sqrt{2\pi}}\bigg) \cdot \frac{1}{2} \sum_{i=1}^n \big( y^{(i)} - \theta^T x^{(i)} \big)^2 \end{align*} Hence, maximizing $l(\theta)$ gives the same answer as minimizing (due to the negative sign). Note that our final choice of $\theta$ does not depend on $\sigma$. \[J(\theta) \equiv \frac{1}{2} \sum_{i=1}^n \big( y^{(i)} - \theta^T x^{(i)}\big)^2 \] Therefore, under the previous probabilistic assumptions of the data, least-squares regression corresponds to finding the maximum likelihood estimate of $\theta$. A different set of assumptions will lead to a different likelihood function and therefore a different cost function.

- The line of best fit is the line where the sum of the residuals between the predicted values and the data points is minimized. This is known as least absolute deviations/value/errors/residual (LAD, LAV, LAE, LAR) or the $L_1$-norm condition.

- The line of best fit is the line where the sum of the squares of the residuals is minimized. This is known as (ordinary) least squares.

- The line of best fit is the line where the sum of the squares of the redsiduals, with each residual scaled by a weight, is minimized. This can place a higher "priority" on certain data points when fitting a line.

Derivation of the Least Squares Cost Function

The cost function

\[J(h_\theta) \equiv \frac{1}{2} \sum_{i=1}^n \big( h_\theta(x^{(i)}) - y^{(i)} \big)^2\]

introduced in the next section may seem quite arbitrary. Why must this specific function be minimized? What is so special about this least squares, and why does minimizing this function necessarily give the line of best fit? How do we even define what exactly the line of best fit is? We will answer all these questions here.

Assume that the tangent variables and the inputs are related via the equation (in this case $h_\theta (x) \equiv \theta^T x$): \[y^{(i)} = \theta^T x^{(i)} + \varepsilon^{(i)}, \;\;\; \text{ with } \varepsilon^{(i)} \sim \mathcal{N}(0, \sigma^2)\] where $\theta^T: \mathbb{R}^{d+1} \longrightarrow \mathbb{R}$ represents a linear map, and the $\varepsilon^{(i)}$ are error terms that capture either unmodeled effects or random noise. Let us further assume that the $\varepsilon^{(i)}$ are distributed iid and Gaussian. The density $p$ of $\varepsilon^{(i)}$ is given by \[p(\varepsilon^{(i)}) \equiv \frac{1}{\sigma \sqrt{2 \pi}} \exp\bigg( -\frac{ (\varepsilon^{(i)})^2}{2 \sigma^2}\bigg)\] These are reasonably justifiable assumptions since we are basically saying that the effects of the errors should be the same for each training sample (the choice of Gaussian is supported by CLT). Now, given that we have all the input samples given $x^{(i)} \in \mathbb{R}^{d+1}$ (remember that $x_0^{(i)} = 1$ trivially to account for the translation term $\theta_0$), this implies that $\theta^T x^{(i)} \in \mathbb{R}$ is already evaluated and given, and so the following distribution of $y^{(i)}$ given $x^{(i)}$ is just a shifted Gaussian. \[y^{(i)}\,|\,x^{(i)} \sim \mathcal{N}\big(\theta^T x^{(i)}, \sigma^2\big) \implies p \big(y^{(i)}\,|\,x^{(i)} \big) \equiv \frac{1}{\sigma \sqrt{2 \pi}} \exp \bigg( - \frac{\big(y^{(i)} - \theta^T x^{(i)}\big)^2}{2 \sigma^2}\bigg) \] for all $i = 1, 2, \ldots, n$. That is, $y^{(i)} \,|\, x^{(i)}$ are all Gaussian distributions centered at $\theta^T x^{(i)}$ and variance of $\sigma^2$. Since these are all iid, we can create a joint distribution of all $y^{(i)}$s, encoded into a single $y$, defined with the multivariate density $P$: \[P(y\,|\,X) \equiv P\Bigg( \begin{pmatrix} y^{(1)} \\ y^{(2)} \\ \vdots \\ y^{(n)} \end{pmatrix} \, \Bigg| \, \begin{pmatrix} - & (x^{(2)})^T & -\\- & (x^{(1)})^T & -\\\vdots & \vdots & \vdots \\- & (x^{(n)})^T & - \end{pmatrix} \Bigg) \equiv \prod_{i=1}^n \frac{1}{\sigma \sqrt{2\pi}} \exp \bigg( - \frac{\big(y^{(i)} - \theta^T x^{(i)}\big)^2}{2 \sigma^2}\bigg)\] That is, given a set of input vectors $x^{(1)}, x^{(2)}, \ldots, x^{(n)}$ all encoded in $n \times (d+1)$ matrix $X$, $P: \mathbb{R}^n \longrightarrow \mathbb{R}$ is a probability density function that outputs the probability of the sample outputs being $y^{(1)}, y^{(2)}, \ldots, y^{(n)}$, i.e. the probability of the output vector being $y$. The distribution is centered at vector \[\begin{pmatrix} \theta^T x^{(1)} \\ \theta^T x^{(2)} \\ \vdots \\ \theta^T x^{(n)} \end{pmatrix} = X \theta \in \mathbb{R}^n\] Let's get a bit more abstract. So far, we have treated $\theta$ and $X$ as the givens and treated the density as a function of $y$. If we treat $X$ and $y$ as givens and $\theta$ as the input, then we get a likelihood function $L$, defined (really, the same): \[L(\theta) \equiv \prod_{i=1}^n \frac{1}{\sigma \sqrt{2\pi}} \exp \bigg( - \frac{\big(y^{(i)} - \theta^T x^{(i)}\big)^2}{2 \sigma^2}\bigg)\] That is, $L$ takes in a value of $\theta$ that represents a certain linear best fit model $h$, and it tells us the probability of output $y$ happening given inputs $X$ in the form of a density value.

Now, given this probabilistic model relating the $y^{(i)}$s to the $x^{(i)}$s, it is logically obvious to choose $\theta$ such that $L(\theta)$ is as high as possible so that we would get the $\theta$ value that has the greatest probability of outputting $y$ given data $X$. But this is the same thing as maximizing the log likelihood $l(\theta)$ \begin{align*} l(\theta) & = \log L(\theta) \\ & = \log \prod_{i=1}^n \frac{1}{\sigma \sqrt{2\pi}} \exp \bigg( -\frac{(y^{(i)} - \theta^T x^{(i)})^2}{2\sigma^2} \bigg) \\ & = \sum_{i=1}^n \log \frac{1}{\sigma \sqrt{2 \pi}} \exp \bigg( - \frac{(y^{(i)} - \theta^T x^{(i)})^2}{2\sigma^2} \bigg) \\ & = - \frac{n}{\sigma^2} \, \log \bigg(\frac{1}{\sigma \sqrt{2\pi}}\bigg) \cdot \frac{1}{2} \sum_{i=1}^n \big( y^{(i)} - \theta^T x^{(i)} \big)^2 \end{align*} Hence, maximizing $l(\theta)$ gives the same answer as minimizing (due to the negative sign). Note that our final choice of $\theta$ does not depend on $\sigma$. \[J(\theta) \equiv \frac{1}{2} \sum_{i=1}^n \big( y^{(i)} - \theta^T x^{(i)}\big)^2 \] Therefore, under the previous probabilistic assumptions of the data, least-squares regression corresponds to finding the maximum likelihood estimate of $\theta$. A different set of assumptions will lead to a different likelihood function and therefore a different cost function.

Least-Squares Linear Regression with Normal Equations

We can encode each of the $n$ data points in a $n \times (d+1)$ matrix and the $y$ output point in a $n$-vector of forms:

\[X = \begin{pmatrix} x^{(1)} \\ x^{(2)} \\ x^{(3)} \\ \vdots \\ x^{(n)} \end{pmatrix} =

\begin{pmatrix}

1 & x^{(1)}_1 & x^{(1)}_2 & \ldots & x^{(1)}_d \\

1 & x^{(2)}_1 & x^{(2)}_2 & \ldots & x^{(2)}_d \\

1 & x^{(3)}_1 & x^{(3)}_2 & \ldots & x^{(3)}_d \\

\vdots & \vdots & \vdots & \ddots & \vdots\\

1 & x^{(n)}_1 & x^{(n)}_2 & \ldots & x^{(n)}_d

\end{pmatrix}, \;\;\;\; y = \begin{pmatrix} y^{(1)} \\ y^{(2)} \\ y^{(3)} \\ \vdots \\ y^{(n)} \end{pmatrix}\]

and the problem now simplifies into finding the least-squares solution to

\[X \theta = y\]

which can be solved by solving the normal equation for $\theta$:

\[X^T X \theta = X^T y \implies \theta = (X^T X^{-1}) X^T y\]

Remember that this formula only works under the least-squares assumption. The proof for this formula can be found in many linear algebra textbooks, so it will not be shown here. Let us take a look at this from a computational perspective.

>>> import numpy as np

>>> X0 = np.ones((1000, 1))

>>> X1 = 2 * np.random.rand(1000, 1) #1000 random input points from 0~2

>>> X2 = 3 * np.random.rand(1000, 1) + 4 #1000 random input points from 4~7

>>> X = np.hstack((X0, np.hstack((X1, X2)))) #Make input data matrix X

>>> X.shape

(1000, 3)

>>> Y = 7 - 3*X1 + 12*X2 + np.random.rand(1000, 1) #1000 output points that are linearly coorelated with the inputs with a little bit of noise added

>>> Y.shape

(1000, 1)

>>> print(np.linalg.lstsq(X, Y, rcond=None))

(array([[ 7.57196006],

[-3.00718055],

[11.98805313]]), array([84.86198347]), 3, array([184.74453474, 18.76367431, 4.44033133]))

We can see that the solution is computed to be

\[\theta = \begin{pmatrix}

7.57196006 \\ -3.00718055 \\ 11.98805313 \end{pmatrix} \implies h_\theta = 7.57196006 -3.00718055 x_1 + 11.98805313 x_2\]

Least-Squares Linear Regression with Batch & Stochastic Gradient Descent

Still under the method of least squares, we still use the least-squares cost function $J$. Letting $d_\mathcal{Y}$ be the metric of $\mathcal{Y}$, the first equation represents the function in full generality, while the second and third lines assume that $\mathcal{Y} = \mathbb{R}^d$ and $\mathcal{Y} = \mathbb{R}$, respectively.

\begin{align*}

J(h_\theta) & \equiv \frac{1}{2} \sum_{i=1}^n \Big(d_\mathcal{Y}\big( h_\theta(x^{(i)}), y^{(i)}\big)\Big)^2 \\

& \equiv \frac{1}{2} \sum_{i=1}^n \big|\big|h_\theta(x^{(i)}) - y^{(i)} \big|\big|^2 \\

& \equiv \frac{1}{2} \sum_{i=1}^n \big( h_\theta(x^{(i)}) - y^{(i)} \big)^2

\end{align*}

Therefore, $J: \mathcal{Y} \longrightarrow \mathbb{R}^+$ ($J: \mathbb{R}^{d+1} \longrightarrow \mathbb{R}^+$) is a (hopefully) smooth function. This smoothness criterion allows us to do calculus on it, and to minimize $J$ (i.e. get it at close to $0$ as possible since $J \geq 0$), we start off an initial $\theta \in \mathbb{R}^{d+1}$ (since we have to account for the value of $\theta_0$) and go in the direction opposite of that of the gradient. By abuse of notation (in computer science notation), the algorithm basically reassigns $\theta$ using the iterative algorithm:

\[\theta = \theta - \alpha \nabla J(\theta)\]

where $\alpha$ is a scalar constant called the learning rate (i.e. how large each step is going to be). This actually requires us to compute the gradient, which we can easily do using chain rule (we derive it with respect to index $j$ to prevent confusion between the summation index and partial derivative index):

\begin{align*}

\frac{\partial}{\partial \theta_j} J(\theta) & = \frac{\partial}{\partial \theta_j} \bigg(\frac{1}{2} \sum_{i=1}^n \Big( h_\theta (x^{(i)}) - y^{(i)} \Big)^2 \bigg) \\

& = \frac{1}{2} \sum_{i=1}^n \frac{\partial}{\partial \theta_j} \bigg( \Big( h_\theta (x^{(i)}) - y^{(i)}\Big)^2 \bigg) \\

& = \frac{1}{2} \sum_{i=1}^n 2 \Big( h_\theta (x^{(i)}) - y^{(i)}\Big) \cdot \frac{\partial}{\partial \theta_j} \big( h_\theta (x^{(i)}) - y^{(i)} \big) \\

& = \sum_{i=1}^n \big( h_\theta (x^{(i)}) - y^{(i)}\big) \cdot x_j^{(i)} \;\;\;\;\;(\text{for } j = 0, 1, \ldots, d)

\end{align*}

which can be simplified as the dot product

\[\frac{\partial}{\partial \theta_j} J(h_\theta) = \begin{pmatrix}

h_\theta(x^{(1)}) - y^{(1)} \\

h_\theta(x^{(2)}) - y^{(2)} \\

\vdots \\

h_\theta(x^{(n)}) - y^{(n)}

\end{pmatrix} \cdot \begin{pmatrix}

x_j^{(1)} \\ x_j^{(2)} \\ \vdots \\ x_j^{(n)}

\end{pmatrix} \;\;\;\;\; \text{ for } j = 0, 1, 2, \ldots, d\]

and even better, the entire gradient can be simplified as

\[\nabla J(\theta) = \begin{pmatrix}

1 & x^{(1)}_1 & x^{(1)}_2 & \ldots & x^{(1)}_d \\

1 & x^{(2)}_1 & x^{(2)}_2 & \ldots & x^{(2)}_d \\

1 & x^{(3)}_1 & x^{(3)}_2 & \ldots & x^{(3)}_d \\

\vdots & \vdots & \vdots & \ddots & \vdots\\

1 & x^{(n)}_1 & x^{(n)}_2 & \ldots & x^{(n)}_d

\end{pmatrix} \begin{pmatrix}

h_\theta(x^{(1)}) - y^{(1)} \\

h_\theta(x^{(2)}) - y^{(2)} \\

h_\theta(x^{(3)}) - y^{(3)} \\

\vdots \\

h_\theta(x^{(n-1)}) - y^{(n-1)} \\

h_\theta(x^{(n)}) - y^{(n)}

\end{pmatrix} = X \Bigg(\begin{pmatrix}

h_\theta(x^{(1)}) \\

\vdots \\

h_\theta(x^{(n)})

\end{pmatrix} - y \Bigg)\]

Therefore, we can simplify $\theta = \theta - \alpha \nabla J(\theta)$ into

\[\theta = \theta - \alpha \; X \Bigg(\begin{pmatrix}

h_\theta(x^{(1)}) \\

\vdots \\

h_\theta(x^{(n)})

\end{pmatrix} - y \Bigg)\]

which can easily be done on a computer using a package like numpy. Remember that GD is really just an algorithm that updates $\theta$ repeatedly until convergence, but there are a few problems.

- The algorithm can be susceptible to local minima. A few countermeasures include shuffling the training set or randomly choosing initial points $\theta$.

- The algorithm may not converge if $\alpha$ (the step size) is too high, since it may overshoot. This can be solved by reducing the $\alpha$ with each step.

- The entire training set may be too big, and it may therefore be computationally expensive to update $\theta$ as a whole, especially if $d >> 1$. This can be solved using stochastic gradient descent.

Derivation of Least Absolute Deviation Cost Function

Even though most linear fitting is done under the least squares assumption, we will take a look at the least absolute derivations (LAD) method. Our set of assumptions are slightly different here. Like before, the response variables and the inputs are related via the equation (in this case $h_\theta (x) \equiv \theta^T x$):

\[y^{(i)} = \theta^T x^{(i)} + \varepsilon^{(i)}\]

but now, the error terms $\varepsilon^{(i)} \sim \text{Laplace}(0, b)$ are Laplace distributions, also called double exponential distributions (since it can be thought of as two exponential distributions spliced together back-to-back). The density $p$ of $\varepsilon^{(i)}$ is given by

\[p(\varepsilon^{(i)}) \equiv \frac{1}{2b} \exp \bigg(-\frac{|x - \mu|}{b}\bigg)\]

After some derivation for maximizing the likelihood function, we get the cost function to be

\[J(\theta) \equiv \frac{1}{2} \sum_{i=1}^n \big| y^{(i)} - \theta^T x^{(i)}\big|\]

Unfortunately, the LAD line is not as simple to compute efficiently. In fact, LAD regression does not have an analytical solving method (like the normal equations), and so an iterative approach like GD is required. The GD process is exactly the same as that for GD in the least-squares context.

Polynomial Regression of One Scalar Input Attribute

Polynomial regression is a form of regression in which the relationship between the independent variable $x$ and the dependent variable $y$ is modelled as an $n$th degree polynomial in $x$. Beginning with both $\mathcal{X} = \mathbb{R}$ (and of course, $\mathcal{Y} = \mathbb{R}$), the linear model

\[y^{(i)} = \theta^T x^{(i)} + \varepsilon^{(i)} = \begin{pmatrix} \theta_0 & \theta_1 \end{pmatrix} \begin{pmatrix} 1 \\ x^{(i)} \end{pmatrix} + \varepsilon^{(i)} = \theta_0 + \theta_1 x^{(i)} + \varepsilon^{(i)}\]

is used. However, we may have to model quadratically using the following

\[y^{(i)} = \theta^T x^{(i)} + \varepsilon^{(i)} = \begin{pmatrix} \theta_0 & \theta_1 & \theta_2 \end{pmatrix} \begin{pmatrix} 1 \\ x^{(i)} \\ x^{(i) 2} \end{pmatrix} + \varepsilon^{(i)} = \theta_0 + \theta_1 x^{(i)} + \theta_2 x^{(i) 2} + \varepsilon^{(i)}\]

and for modeling as an $p$th degree polynomial, we have the general polynomial regression model

\begin{align*}

y^{(i)} = \theta^T x^{(i)} + \varepsilon^{(i)} & = \begin{pmatrix} \theta_0 & \theta_1 & \ldots & \theta_p \end{pmatrix} \begin{pmatrix} 1 \\ x^{(i)} \\ \vdots \\ (x^{(i)})^p \end{pmatrix} + \varepsilon^{(i)} \\

& = \theta_0 + \theta_1 x^{(i)} + \theta_2 (x^{(i)})^2 + \ldots + \theta_p (x^{(i)})^p + \varepsilon^{(i)}

\end{align*}

Conveniently this model is linear with respect to, not just the one variable $x$, but the vector $\big( 1 \;\; x \;\; x^2 \;\; x^3 \;\; \ldots \;\; x^p\big)^T$, which can be calculated with what we call the feature map $\phi: \mathbb{R} \longrightarrow \mathbb{R}^{p+1}$, which is defined

\[\phi: x \mapsto \begin{pmatrix} 1 \\ x \\ \ldots \\ x^p \end{pmatrix} \]

So treating $x, x^2, \ldots$ as being distinct independent variables in a multiple regression model, we can carry on normally. For clarification of terminology,

- the original input value $x$ will be called the input attributes of the problem.

- The mapped variables $\phi(x) \in \mathbb{R}^{p+1}$ will be called the feature variables.

Polynomial Regression with Multiple Scalar Input Attributes

Let us have $\mathcal{X} = \mathbb{R}^d$, with the input attributes being not $x$, but represented by the vector

\[\begin{pmatrix} x_1 \\ \vdots \\ x_d \end{pmatrix}\]

If we want to model it using a $p$th degree multivariate polynomial, we can use the feature map $\phi: \mathbb{R}^d \longrightarrow \mathbb{R}^{\tilde{p}}$ to map it to a vector containing all monomials of $x$ with degree $\leq p$. For example, with $d=2$ and $p$ still a variable, $\phi$ would be defined

\[\phi: \begin{pmatrix} x_1 \\ x_2 \end{pmatrix} \mapsto

\begin{pmatrix} 1 \\ x_1 \\ x_2 \\ x_1^2 \\ \vdots \\ x_1^2 x_2^{p-2} \\ x_1 x_2^{p-1} \\ x_2^{p} \end{pmatrix}\]

and we can see that the dimensionality of the codomain is $\tilde{p} = p(p+1)/2$. When we set $d$ as a variable, the dimensionality of the codomain is on the order of $d^p$, which becomes very large even for small values of $d$ and $p$. However, the input attributes (notice that unlike the previous case, $x \in \text{Mat}(n \times d, \mathbb{R}$ since the samples are $d$-vectors. )

\[x = \begin{pmatrix} — & x^{(1)} & — \\ — & x^{(2)} & — \\ — & x^{(3)} & — \\ \vdots & \vdots & \vdots \\ — & x^{(n)} & — \end{pmatrix} = \begin{pmatrix}

x_1^{(1)} & x_2^{(1)} & \ldots & x_d^{(1)} \\

x_1^{(2)} & x_2^{(2)} & \ldots & x_d^{(2)} \\

\vdots & \vdots & \ddots & \vdots \\

x_1^{(n)} & x_2^{(n)} & \ldots & x_d^{(n)}

\end{pmatrix}\]

become mapped to the input features encoded in a $n \times \tilde{p}$ matrix.

\begin{align*}

X & = \begin{pmatrix}

— & \phi(x^{(1)}) & — \\

— & \phi(x^{(2)}) & — \\

\vdots & \vdots & \vdots \\

— & \phi(x^{(n)}) & —

\end{pmatrix} \\

& = \begin{pmatrix}

1 & x_1^{(1)} & x_2^{(1)} & \ldots & (x_1^{(1)})^2 (x_2^{(1)})^3 (x_d^{(1)}) & \ldots & (x_d^{(1)})^p \\

1 & x_1^{(2)} & x_2^{(2)} & \ldots & (x_1^{(2)})^2 (x_2^{(2)})^3 (x_d^{(2)}) & \ldots & (x_d^{(2)})^p \\

\vdots & \vdots & \vdots & \ddots & \vdots & \ddots & \vdots \\

1 & x_1^{(n)} & x_2^{(n)} & \ldots & (x_1^{(n)})^2 (x_2^{(n)})^3 (x_d^{(n)}) & \ldots & (x_d^{(n)})^p

\end{pmatrix}

\end{align*}

and we solve the linear system $X\theta = y$ for $\theta$ to find the multivariable polynomial of best fit.

Perceptron Classification Algorithm

[Hide]

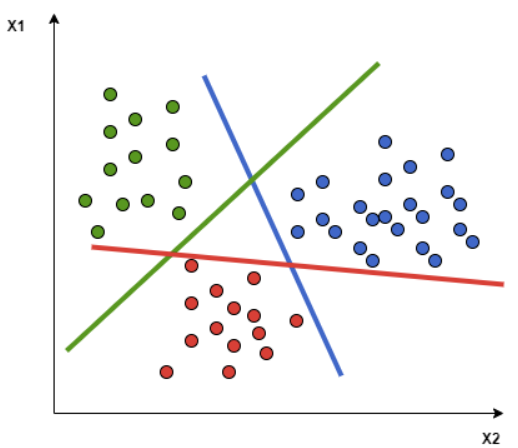

The perceptron algorithm is a classification algorithm for learning a binary classifier with a threshold function. It basically takes the input space $\mathcal{X} = \mathbb{R}^d$ and divides it into two with a hyperplane. If an input point lands in one side of the hyperplane, it gets classified into $0$, and the other $1$. More mathematically, given the input space $\mathcal{X} = \mathbb{R}^d$ and the output space $\mathcal{Y} = \{0, 1\}$, the function $f: \mathbb{R}^d \longrightarrow \{0, 1\}$ is defined

\[f (x) \equiv \begin{cases}

1 & \text{ if } w \cdot x > b \\

0 & \text{ if } w \cdot x \leq b

\end{cases}\]

where $w$ is a vector of real-valued weights (which is really the normal vector of the hyperplane), $w \cdot x$ is the dot product (representing the hyperplane), and $b$ is the bias (the translation factor of the hyperplane). Note that this is similar to a heaviside step function. We can clearly see that the set

\[\{x \in \mathbb{R}^d \;|\; w \cdot x = b\}\]

is a hyperplane in $\mathbb{R}^d$. Now the question remaining is how do we know which hyperplane to choose? That is, how do we find the optimal (whatever that may mean) vectors $w$ and $b$?

Linear Regression Perceptron

Given that we have $n$ input data points with $d$ parameters, say that the output space $\mathcal{Y}$ is discrete, consisting of two classifications: Orange and Blue. These classes can be coded as a binary variable Orange=1 and Blue=0, and then fit the data as if it were a regular linear regression to get predictor function $h_\theta$. Now, given any arbitrary point in $x \in \mathcal{X}$, we can determine whether the value of $h_\theta (x)$ is closer to $0$ or $1$ and classify accordingly. That is,

\begin{align*}

d\big( h_\theta (x), 0 \big) \geq d\big( h_\theta (x), 1 \big) & \implies x \text{ is Orange} \\

d\big( h_\theta (x), 0 \big) < d\big( h_\theta (x), 1 \big) & \implies x \text{ is Blue}

\end{align*}

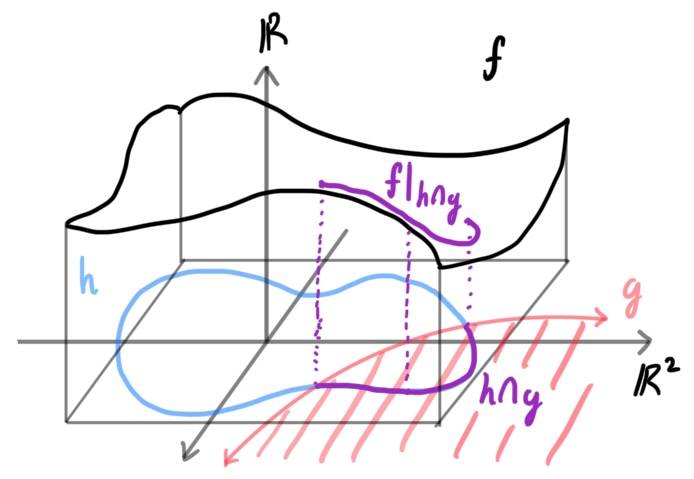

Visually, we can think of $h_\theta$ as a hyperplane of $\mathcal{X} = \mathbb{R}^d$ that divides $\mathcal{X}$ into two "half-spaces." If a point lands in one half-space, then it gets classified into one bin, and if it lands on the other half-space, then it gets classified into the other bin. This process may be unstable for processes with a higher number of bins.

Probabilistic Binary Classification with Logistic Regression

[Hide]

The perceptron algorithm is a function that takes an input value and outputs either $0$ or $1$, but there is no sense of uncertainty. What if we wanted a probability of an input vector being in class $0$ or $1$? Rather than outputting either $0$ or $1$, an algorithm that outputs a 2-tuple $(p, 1-p)$ that represents the probability that it is in $0$ and $1$ (e.g. $(0.45, 0.55)$). This is where logistic regression comes in. Recall that the formula for the logistic function $f: \mathbb{R} \longrightarrow (0, 1)$ is:

\[f(x) \equiv \frac{L}{1 + e^{-k(x - x_0)}}\]

where $L$ is the curve's maximum value, $k$ is the logistic growth rate or steepness of the curve, and $x_0$ is the value of the sigmoid midpoint. What makes the logistic function right for this type of problem is that it's range is $(0, 1)$, with the asymptotic behavior towards $y = 0$ and $y = 1$. We can adjust our hypothesis $h$ to the logistic equation

\[h_\theta (x) \equiv g\big( \theta^T x\big) \equiv \frac{1}{1 + e^{-\theta^T x}}\]

Note that this is just a composition of the functions

\[x \mapsto \theta^T x \;\;\;\text{ and }\;\;\; x \mapsto \frac{1}{1 + e^{-x}}\]

![]() We extend this into a $d$-dimensional space. Given the $d$-dimensional point $x$ (note that we did not set $x_0 = 0$), the function (which does add that $x_0$ term), which we will briefly call $\mathcal{L}$, is defined

\[\mathcal{L}: x = \begin{pmatrix} x_1 \\ \vdots \\ x_d \end{pmatrix} \mapsto \theta^T \tilde{x} = \begin{pmatrix} \theta_0 & \ldots & \theta_d \end{pmatrix} \begin{pmatrix} 1 \\ x_1 \\ \vdots \\ x_d \end{pmatrix}\]

It basically means that we first attach the $x_0 = 1$ term to create a $(d+1)$-dimensional vector, and then we dot product it with $\theta$. This definition naturally constructs the affine hyperplane of $\mathbb{R}^d$, defined

\[H \equiv \{ x \in \mathbb{R}^d \;|\; \mathcal{L}(x) = 0\}\]

If $d$-dimensional $x$ is in $H \subset \mathbb{R}^d$, then $\mathcal{L}(x) = 0$. But this output $0$ is inputted into the logistic function

\[g(0) = \frac{1}{1 + e^{-0}} = \frac{1}{2}\]

This means that set of points in $H$ represents the input values where the regression algorithm will output exactly $(0.5, 0.5)$. We can construct the quotient space

\[\mathbb{R}^d / H\]

and easily visualize the gradient, which lightens and darkens as we move from hyperplane to hyperplane.

We extend this into a $d$-dimensional space. Given the $d$-dimensional point $x$ (note that we did not set $x_0 = 0$), the function (which does add that $x_0$ term), which we will briefly call $\mathcal{L}$, is defined

\[\mathcal{L}: x = \begin{pmatrix} x_1 \\ \vdots \\ x_d \end{pmatrix} \mapsto \theta^T \tilde{x} = \begin{pmatrix} \theta_0 & \ldots & \theta_d \end{pmatrix} \begin{pmatrix} 1 \\ x_1 \\ \vdots \\ x_d \end{pmatrix}\]

It basically means that we first attach the $x_0 = 1$ term to create a $(d+1)$-dimensional vector, and then we dot product it with $\theta$. This definition naturally constructs the affine hyperplane of $\mathbb{R}^d$, defined

\[H \equiv \{ x \in \mathbb{R}^d \;|\; \mathcal{L}(x) = 0\}\]

If $d$-dimensional $x$ is in $H \subset \mathbb{R}^d$, then $\mathcal{L}(x) = 0$. But this output $0$ is inputted into the logistic function

\[g(0) = \frac{1}{1 + e^{-0}} = \frac{1}{2}\]

This means that set of points in $H$ represents the input values where the regression algorithm will output exactly $(0.5, 0.5)$. We can construct the quotient space

\[\mathbb{R}^d / H\]

and easily visualize the gradient, which lightens and darkens as we move from hyperplane to hyperplane.

Visualization Through Quotient Vector Spaces

There is a very nice way to visualize this. Consider the one-dimensional logistic function

\[g(z) \equiv \frac{1}{1 + e^{-z}}\]

which has range $(0, 1)$ and $g(z) \rightarrow 1$ and $z \rightarrow \infty$ and $g(z) \rightarrow 0$ as $z \rightarrow -\infty$. Rather than imagining it as the regular sigmoid in $\mathbb{R}^2$, we can just imagine the domain $\mathbb{R}$ itself and "color" it in greyscale, representing $0$ as white and $1$ as black. Then, we should visualize the real line as a gradient that gets more and more white (but not completely white) as $z \rightarrow -\infty$ and more and more black (but not completely black) as $z \rightarrow \infty$.

Gradient Descent

Now, given that

\[h_\theta (x) \equiv \frac{1}{1 + e^{-\theta^T x}}\]

(or really for any function $h_\theta: \mathbb{R}^{d+1} \longrightarrow [0,1]$) such that

\begin{align*}

\mathbb{P}(y = 1\;|\; x; \theta) & = h_\theta (x) \\

\mathbb{P}(y = 0\;|\; x; \theta) & = 1 - h_\theta (x)

\end{align*}

which can be written more compactly as below. Remember, the notation means the probability of $y$ given $x$ and where $\theta$ is a given.

\[p(y\,|\,x; \theta) = \big( h_\theta (x)\big)^y \; \big( 1 - h_\theta (x)\big)^{1-y}\]

Assuming that the $n$ training samples were generated independently, we can then write down the likelihood of the parameters as

\begin{align*}

L(\theta) & = p(y\;|\; X;\theta) \\

& = \prod_{i=1}^n p(y^{(i)} \;|\; x^{(i)} ; \theta) \\

& = \prod_{i=1}^n \big( h_\theta (x^{(i)})\big)^{y^{(i)}} \big( 1 - h_\theta (x^{(i)})\big)^{1-y^{(i)}}

\end{align*}

As before, it is simpler to maximize the log likelihood

\begin{align*}

l(\theta) & = \log L(\theta) \\

& = \sum_{i=1}^n y^{(i)} \log h_\theta (x^{(i)}) + (1 - y^{(i)}) \log (1 - h_\theta (x^{(i)}))

\end{align*}

But since there is no negative sign in front of the whole summation, we can simply just use gradient ascent to maximize $l$ (The subscript $\theta$ on $\nabla$ is there to clarify that the gradient is computed with respect to the $\theta$, not $X$).

\[\theta = \theta + \nabla_\theta l(\theta)\]

But since $g^\prime (z) = g(z)\, (1 - g(z))$, we can derive the gradient of $l: \mathbb{R}^{d+1} \longrightarrow \mathbb{R}$ with its partials

\begin{align*}

\frac{\partial}{\partial \theta_j} l(\theta) & = \bigg( y \frac{1}{g(\theta^T x )} - (1-y) \frac{1}{1 - g(\theta^T x)} \bigg) \, \frac{\partial}{\partial \theta_j} g(\theta^T x) \\

& = \bigg( y \frac{1}{g(\theta^T x)} - (1 - y) \frac{1}{1 - g(\theta^T x)} \bigg) g(\theta^T x) (1 - g(\theta^T x)) \frac{\partial}{\partial \theta_j} \theta^T x \\

& = \big( y (1 - g(\theta^T x)\big) - (1 - y) g(\theta^T x)\big) x_j \\

& = \big( y - h_\theta (x)\big) x_j

\end{align*}

Therefore, the stochastic gradient ascent rule is reduced to (vector and coordinate form)

\[\theta = \theta + \alpha \big( y^{(i)} - h_\theta (x^{(i)}\big) \, x^{(i)} \iff \theta_j = \theta_j + \alpha \big( y^{(i)} - h_\theta (x^{(i)}) \big) \, x_j^{(i)}\]

K-Nearest Neighbors Classification

[Hide]

The k-nearest neighbors (KNN) algorithm is a classification method that uses observations closest to $x$ in the input space to form its prediction $h(x)$ (note that $h$ in this case represents a general predictor function, not necessarily linear). Given $n$ training samples $\big( x^{(i)}, y^{(i)} \big) \in \mathbb{R}^d \times \mathcal{Y}$, we would like to classify a point $x_0 \in \mathbb{R}^d$ into one of two bins, numerically labeled $0$ or $1$. Given this point $x_0 \in \mathcal{X}$, let the $k$-closest neighborhood of $x_0$

\[N_k (x_0)\]

be defined as the set of $k$ points in $\mathcal{X}$ that are closest to $x_0$. More mathematically, $N_k (x_0)$ are 15 points $x \in \mathcal{X}$ with the smallest values of $d(x_0, x)$, where $d$ is a well-defined metric of $\mathcal{X}$. With this, our predictor function can be defined as

\[h(x_0) \equiv \begin{cases}

1 & \text{ if } \frac{1}{k} \sum_{x \in N_k (x_0)} x \geq \frac{1}{2}\\

0 & \text{ if } \frac{1}{k} \sum_{x \in N_k (x_0)} x < \frac{1}{2}

\end{cases}\]

This basically means that we take the "average" of the $k$ nearest neighbors of point $x_0$ and depending on which value ($0$ or $1$) it is closer to (i.e. on which side of the $0.5$ value it is on), the function $h$ assigns $x_0$ to the proper bin. We can see that far fewer training observations are misclassified compared to the linear model above.

Parameter Selection

The best choice of $k$ depends on the data:

- Larger values of $k$ reduces the effect of noise on the classification, but make boundaries between classes less distinct. The number of misclassified data points (error) increases.

- Smaller values are more sensitive to noise, but boundaries are more distinct and the number of misclassified data points (error) decreases.

1-Nearest Neighbor Classifier

The 1-nearest neighbor classification algorithm basically takes an input $x_0$ and assigns it the same class as that of the closest point, in $N_1 (x_0)$. It is the most intuitive, with an error of $0$.

Weighted Nearest Neighbour Classifier

The $k$-nearest neighbour classifier can be viewed as assigning the $k$ nearest neighbours a weight $1/k$ and all others $0$ weight. This can be generalised to weighted nearest neighbour classifiers. That is, given point $x_0$, let

\[N_k (x_0) = \{x_{01}, x_{02}, \ldots, x_{0k}\}\]

be the $k$ nearest neighbors, with $x_{0i}$ being the $i$th nearest neighbor, of $x_0$. Additionally, let us assign each $x_{0i}$ a weight $\omega_{0i}$ such that

\[\sum_{i=1}^k \omega_{0i} = 1\]

Then, we can redefine the predictor function as

\[h(x_0) \equiv \begin{cases}

1 & \text{ if } \sum_{i=1}^k \omega_{0i} x_{0i} \geq \frac{1}{2}\\

0 & \text{ if } \sum_{i=1}^k \omega_{0i} x_{0i} < \frac{1}{2}

\end{cases}\]

Generalized Linear Models

[Hide]

A generalized linear model (GLM) is a generalization of ordinary linear regression. The GLM generalizes linear regression by allowing the linear model to be related to the response variable via a link function and by allowing the magnitude of the variance of each measurement to be a function of its predicted value. Many first-timers would see a non-linear function being used for the regression model of a dataset and would wonder why this is called a linear model. It is called a generalized linear model because the outcome always depends on a linear combination of the inputs $x_i$ with the parameters $\theta_i$ as its coefficients! That is, a GLM will always be of form

\[h_\theta (x) \equiv g (\eta) \equiv g\big( \theta^T x \big)\]

Furthermore, we have made the following key assumptions for each case.![]()

Since we should be given what kind of distribution $y\,|\,x$ is (Gaussian, Bernoulli, Poisson, etc.), we can use its properties to calculate its expectation in terms of the distribution parameters $\xi_1, \xi_2, \ldots, \xi_k$. We refer to the $k$-vector of these parameters simply as $\xi$. So,

\begin{align*}

h(x) & = \mathbb{E} \big(y\,|\,x) \\

& = \mathfrak{g}_1 (\xi_1, \xi_2, \ldots, \xi_k) \\

& = \mathfrak{g}_1 (\xi)

\end{align*}

For example,

Since we should be given what kind of distribution $y\,|\,x$ is (Gaussian, Bernoulli, Poisson, etc.), we can use its properties to calculate its expectation in terms of the distribution parameters $\xi_1, \xi_2, \ldots, \xi_k$. We refer to the $k$-vector of these parameters simply as $\xi$. So,

\begin{align*}

h(x) & = \mathbb{E} \big(y\,|\,x) \\

& = \mathfrak{g}_1 (\xi_1, \xi_2, \ldots, \xi_k) \\

& = \mathfrak{g}_1 (\xi)

\end{align*}

For example,

Parameter fitting to get the optimal value of $\theta$ is easy. If we have a training set of $n$ examples $\{(x^{(i)}, y^{(i)}); i = 1, \ldots, n\}$ and would like to learn the parameters $\theta_i$ of this model, we would begin by writing down the log-likelihood \begin{align*} l(\theta) & = \sum_{i=1}^n \log p(y^{(i)}\,|\, x^{(i)}; \theta) \\ & = \sum_{i=1}^n \log \prod_{l=1}^k \Bigg( \frac{\exp (\theta_l^T x^{(i)})}{\sum_{j=1}^k \exp(\theta_j^T x^{(i)})}\Bigg)^{1\{y^{(i)} = 1\}} \end{align*} Assuming smoothness when necessary, we can use gradient ascent or some other numerical method to maximize $l$ in terms of $\theta$.

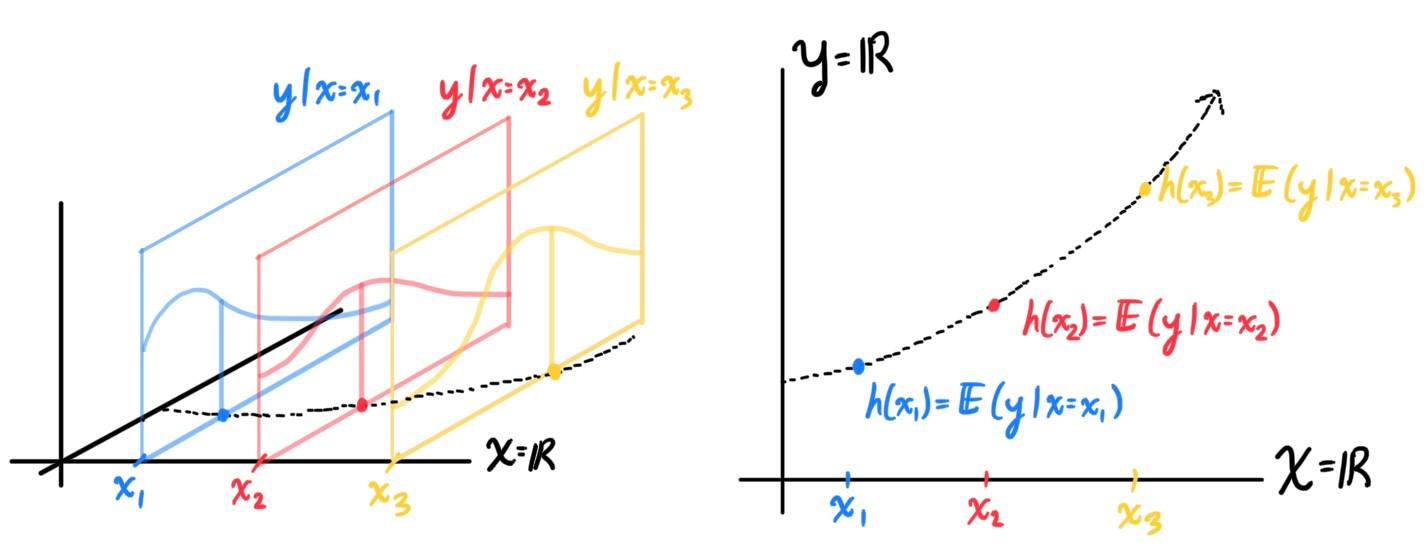

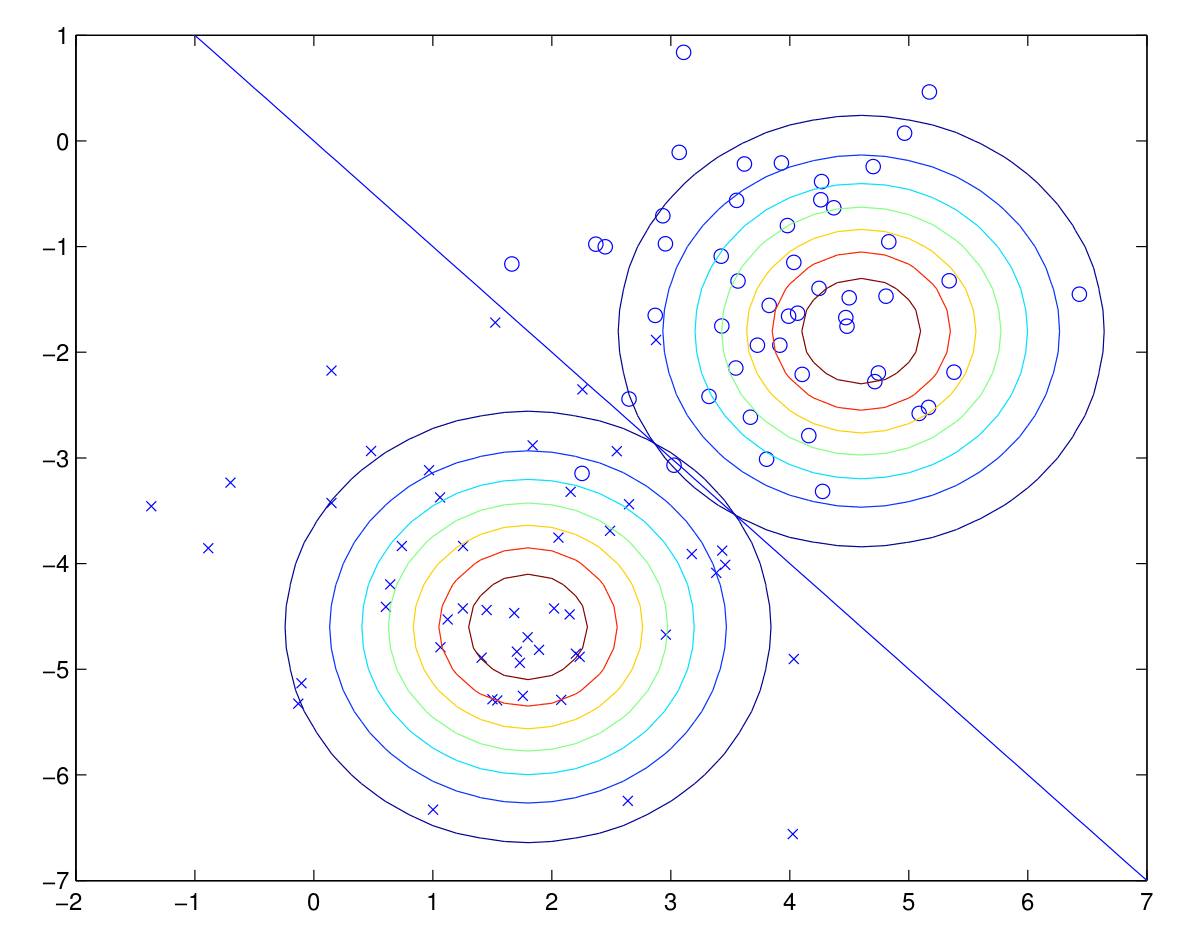

Motivation & Intuition

GLMs may be quite confusing to a first-timer, so let us clarify what we must know. Note that given some explanatory variable $x$, our job is to predict $y$ in the best way possible. However, because of random noise, we can never actually predict $y$ to 100% accuracy. A more accurate interpretation is that given an input $x$, we can, from basic assumptions about the specific problem, determine what distribution the response variable $y$ must follow. That is, the most comprehensive model really takes in a $x$ value and outputs a distribution that itself models the probabilities of $y$ having certain values. So, each outcome $y$ is assumed to be generated from this particular distribution. The best we can do with this distribution is just calculate the expectation, saying that "$y$ has the best chance to land at the mean of this distribution, even though it probably won't."

- In the regression example, we assumed that given input $x$, the output value $y$ will follow the Gaussian distribution: $y\,|\,x; \theta \sim \mathcal{N}(\mu, \sigma^2)$ (for the appropriate definition $\mu = \theta^T x$).

- In the classification example, we assumed that given input $x$, the output value $y$ will follow the Bernoulli distribution: $y\,|\,x; \theta \sim \text{Bernoulli}(\phi)$ (for the appropriate definition $\phi = \frac{1}{1 + e^{-\theta^T x}}$).

Furthermore, we have made the following key assumptions for each case.

- For linear regression, we have assumed that the expected value of the response variable (a random variable) is linear with respect to the set of observed values. This implies that a constant change in a predictor leads to a constant change in the response variable (i.e. a linear-response model) and is appropriate when the response variable can vary, to a good approximation, indefinitely in either direction, or more generally for any quantity that only varies by a relatively small amount compared to the variation in the predictive variables.

- A model that predicts a probability of making a yes/no choice (a Bernoulli variable) is not at all suitable as a linear-response model, since the output (probability) should be bounded on both ends. This is why the logistic model was chosen, which has the range $(0, 1)$ and is appropriate when a constant change leads to the probability being a certain number of times more likely (in contrast to merely increasing linearly).

The Exponential Family

We will delve into the exponential family (not to be confused with the exponential distribution) in full generality. Let $Y$ be a random variable that outputs a vector in a subset of $\mathbb{R}^m$. We can visualize $Y$ by imagining the output space $\mathbb{R}^m$ and a scalar field (representing the distribution of $Y$) defined by a density function:

\[p: \mathbb{R}^m \longrightarrow \mathbb{R}\]

that represents the probability $p(y)$ of $Y$ outputting a certain vector $y$. Furthermore, let the distribution of $y$ have $k$ parameters. For example,

- The univariate Gaussian $y \sim \mathcal{N}(\mu, \sigma^2)$ has $m=1$ and $k=2$ (the mean $\mu$ and variance $\sigma^2$).

- A regular $y \sim \text{Bernoulli}(\phi)$ has $m=1$ (since $\{0, 1\}$ is a subset of $\mathbb{R}$) and $k=1$ (just the $\phi$).

- A multinomial distribution $y \sim \text{Multinomial}(\phi_1, \phi_2, \ldots, \phi_{l-1})$ where $\phi_i$ represents the probability of the random variable landing in bin $i$ has $l-1$ scalar parameters, since the final value of $\phi_l = 1 - \sum_{i=1}^{l-1} \phi_i$. So, we have $m = 1$ (since $\{1, \ldots, l\} \subset \mathbb{R}$) and $k = l-1$ (since $\phi_1, \phi_2, \ldots, \phi_{l-1}$).

- $\eta \in \mathbb{R}^k$ is called the canonical parameter, with the same dimensionality as there are distribution parameters.

- $T(y)$ is the sufficient statistic vector (of function $T: \mathbb{R} \longrightarrow \mathbb{R}^k$), which also has the same dimensionality as $\eta$.

- $h(y)$ is non-negative scalar function.

- $A(\eta)$, known as the cumulant function, (more specifically, $e^{-A(\eta)}$) plays the role of a normalizing factor.

- For $y \sim \text{Bernoulli}(\phi)$, we define the density $p: \{0, 1\} \subset \mathbb{R} \longrightarrow \mathbb{R}$ as an extension of the codomain $\{0, 1\}$ into $\mathbb{R}$, with a scalar parameter value. \[p(y) = \phi^y\,(1 - \phi)^{1-y} = \exp\Bigg(\log\bigg(\frac{\phi}{1 - \phi}\bigg) \,y + \log\big(1 - \phi\big)\Bigg)\] which gives scalar values of both $\eta$ and $T(y)$, since there is only $k=1$ parameter. \[\begin{cases} \eta & = \log\bigg(\frac{\phi}{1 - \phi}\bigg) \\ T(y) & = y \\ A(\eta) & = - \log \big(1 - \phi \big) = \log \big(1 + e^\eta \big) \\ h(y) & = 1 \end{cases}\]

- For the univariate Gaussian distribution $y \sim \mathcal{N}(\mu, \sigma^2)$, we have \[p(y) = \frac{1}{\sigma \sqrt{2\pi}} \exp \bigg( -\frac{1}{2 \sigma^2} (y - \mu)^2 \bigg) = \frac{1}{\sqrt{2\pi}} \exp \bigg( \frac{\mu}{\sigma^2} y - \frac{1}{2 \sigma^2} y^2 - \frac{1}{2 \sigma^2} \mu^2 - \log (\sigma) \bigg)\] which gives both $\eta$ and $T(y)$ as 2-dimensional vectors, since there are $k=2$ parameters. \[\begin{cases} \eta & = \begin{pmatrix} \mu/\sigma^2 \\ -1/2\sigma^2 \end{pmatrix} \\ T(y) & = \begin{pmatrix} y \\ y^2 \end{pmatrix} \\ A(\eta) & = \frac{\mu^2}{2 \sigma^2} + \log(\sigma) = - \frac{\eta_1^2}{4 \eta_2} - \frac{1}{2} \log (-2\eta_2) \\ h(y) & = \frac{1}{\sqrt{2\pi}} \end{cases}\]

- For $y \sim \text{Poisson}(\lambda)$, we have the following form, with again $\eta$ and $T(y)$ scalars. \[p(y) = \frac{\lambda^y e^{-\lambda}}{y!} = \frac{1}{y!} \exp \big( y\,\log(\lambda) - \lambda \big) \implies \begin{cases} \eta & = \log(\lambda) \\ T(y) & = y \\ A(\eta) & = \lambda = e^\eta \\ h(y) & = \frac{1}{y!} \end{cases}\]

- For the multinomial distribution $y \sim \text{Multinomial}(\phi_1, \phi_2, \ldots, \phi_{k-1})$ with $k-1$ parameters (since the final parameter $\phi_k$ is determined as being equal to $1 - \sum_{i=1}^{k-1} \phi_i$), we can write the density (with a little bit of calculation) as: \begin{align*} p(y) & = \phi_1^{1\{y=1\}}\, \phi_2^{1\{y=2\}}\ldots \phi_{k-1}^{1\{y=k-1\}} \, \phi_{k}^{1 - \sum_{i=1}^{k-1} 1\{y=i\}} \\ & = \exp\bigg( \sum_{i=1}^{k-1} 1\{y=1\} \log \Big(\frac{\phi_i}{\phi_k}\Big) + \log(\phi_k) \bigg) \end{align*} where $1\{P\}$ is an indicator function that outputs $1$ if condition $P$ is met and $0$ if not. Since there are $k-1$ distribution parameters, this would mean that both $\eta$ and $T(y)$ would be $k-1$ dimensional. With more calculations, it turns out that we have \[\eta = \begin{pmatrix} \log \frac{\phi_1}{\phi_k} \\ \log \frac{\phi_2}{\phi_k} \\ \vdots \\ \log \frac{\phi_{k-1}}{\phi_k} \end{pmatrix}, \;\; T(y) = \begin{pmatrix} 1\{y=1\} \\ 1\{y=2\} \\ \vdots \\ 1\{y=k-1\} \end{pmatrix}, \;\; A(\eta) = -\log(\phi_k), \;\; h(y) = 1\] Remember that the number of parameters $k-1$ and the dimensionality ($1$) of the outcome space $\mathbb{R}$ of the random variable $Y$ are completely independent.

Constructing GLMs

Consider a classification or regression problem where we would like to predict the value of some random variable $y$ as a function of $x$. To derive a GLM for this problem, we will make the following three

assumptions about the conditional distribution of $y$ given $x$ and about our model:

- $y\,|\,x; \theta \sim \text{ExponentialFamily}(\eta)$, i.e. $y\,|\,x, \theta$ is some exponential family distribution

- Given $x$, our goal is to predict the expected value of $y$ given $x$, and so this means we would like the prediction $h(x)$ output by our learned hypothesis function $h$ to satisfy (this assumption is quite intuitively obvious) \[h(x) = \mathbb{E}\big(y\,|\,x\big)\]

- The natural parameter $\eta \in \mathbb{R}^k$ and the inputs $x \in \mathbb{R}^d$ are related linearly. That is, $\theta^T: \mathbb{R}^d \longrightarrow \mathbb{R}^k$. Let us label the columns of $\theta$ as $\theta_1, \theta_2, \ldots, \theta_{k}$. \[\eta = \theta^T x = \begin{pmatrix} | & | & \ldots & | \\ \theta_1 & \theta_2 & \ldots & \theta_{k} \\ | & | & \ldots & | \end{pmatrix}^T \begin{pmatrix} x_1 \\ x_2 \\ \vdots \\ x_d \end{pmatrix} \] This third assumption may seem the least well justified, so it can be considered as a "design choice" for GLMs.

Since we should be given what kind of distribution $y\,|\,x$ is (Gaussian, Bernoulli, Poisson, etc.), we can use its properties to calculate its expectation in terms of the distribution parameters $\xi_1, \xi_2, \ldots, \xi_k$. We refer to the $k$-vector of these parameters simply as $\xi$. So,

\begin{align*}

h(x) & = \mathbb{E} \big(y\,|\,x) \\

& = \mathfrak{g}_1 (\xi_1, \xi_2, \ldots, \xi_k) \\

& = \mathfrak{g}_1 (\xi)

\end{align*}

For example,

Since we should be given what kind of distribution $y\,|\,x$ is (Gaussian, Bernoulli, Poisson, etc.), we can use its properties to calculate its expectation in terms of the distribution parameters $\xi_1, \xi_2, \ldots, \xi_k$. We refer to the $k$-vector of these parameters simply as $\xi$. So,

\begin{align*}

h(x) & = \mathbb{E} \big(y\,|\,x) \\

& = \mathfrak{g}_1 (\xi_1, \xi_2, \ldots, \xi_k) \\

& = \mathfrak{g}_1 (\xi)

\end{align*}

For example,

- $Y \sim \mathcal{N}(\mu, \sigma^2) \implies \mathbb{E}(Y) = \mu$. In this case, $\mathfrak{g}_1 (\xi) = \mathfrak{g}_1 (\mu, \sigma) = \mu$.

- $Y \sim \text{Bernoulli}(\phi) \implies \mathbb{E}(Y) = \phi$. In this case, $\mathfrak{g}_1 (\xi) = \mathfrak{g}_1 (\phi) = \phi$.

- $Y \sim \text{Poisson}(\lambda) \implies \mathbb{E}(Y) = \lambda$. In this case, $\mathfrak{g}_1 (\xi) = \mathfrak{g}_1 (\lambda) = \lambda$

- etc.

Ordinary Least Squares with GLMs

Let us construct the ordinary least squares through GLMs. We consider the target variable $y$ to be continuous, and we model the conditional distribution of $y$ given $x$ as Gaussian $\mathcal{N}(\mu, \sigma^2)$, which is indeed an Exponential Family distribution. Remember that since the expectation of a Gaussian is simply $\mu$, and our earlier derivation showed that $\mu = \eta$, we have

\begin{align*}

h_\theta (x) & = \mathbb{E}\big(y \,|\, x; \theta\big) \\

& = \mu \\

& = \eta \;\;\;\;\; ( = g^{-1} (\eta))\\

& = \theta^T x

\end{align*}

and therefore our best-fit function form is

\[h_\theta (x) = \theta^T x\]

with $\theta$ optimized through other means. This form means that we can simply expect the $x$ and $y$s to be linearly correlated. Note that the response function $g^{-1}$ is the identity map. This is quite intuitive: Assumption 3 says that $x$ is related (affine, with dimension of $x$ being $d+1$) linearly to the natural parameter $\eta$, but $\eta = \mu$ in this case, so $x$ is related linearly to $\mu$! This means that a linear change in $x$ within $\mathbb{R}^d$ will result in a linear change in the mean of the conditional $y\,|\,x$. This results in a line.

Logistic Regression with GLMs

In logistic regression, we are interested in binary classification, so $y \in \{0, 1 \}$. Given that $y$ is binary-valued, it therefore seems natural to choose the Bernoulli family of distributions to model the conditional distribution of $y$ given $x$. In our formulation of the Bernoulli distribution as an exponential family distribution, we computed $\phi = 1/(1 + e^{-\eta})$. It is also obvious that if $y\,|\,x; \theta \sim \text{Bernoulli}(\phi)$, then $\mathbb{E}\big(y\,|\,x; \theta\big) = \phi$. So, we get

\begin{align*}

h_\theta (x) & = \mathbb{E}\big(y\,|\,x; \theta \big) \\

& = \phi \\

& = \frac{1}{1 + e^{-\eta}} = \frac{1}{1 + e^{-\theta^T x}}

\end{align*}

Therefore, a consequence of assuming that $y$ conditioned on $x$ is Bernoulli is that the hypothesis function is of form

\[h_\theta (x) = \frac{1}{1 + e^{-\theta^T x}}\]

Note that the canonical response ($g^{-1}$) and link functions ($g$) are:

\[g^{-1}(\eta) = \frac{1}{1 + e^{-\eta}}, \;\;\;\;\;\; g (\eta) = \log \bigg( \frac{\eta}{1 - \eta} \bigg)\]

Again, let us explain the intuition. Assumption 3 says that $x$ is related (affine, with dimension of $x$ being $d+1$) linearly to the natural parameter $\eta$, but because $\eta$ is related to $\phi$ through the sigmoid function, increasing $\eta$ by a certain value does not increase the expectation of the conditional $y\,|\,x$ linearly. If it did, then the expectation would be unbounded, which would be a problem for a classification problem. More importantly, we would like this classification model to predict that a change in, say 5 units, of a sample increases the probability of it being classified into bin $1$ by a factor of two. But what does "twice as likely" mean in terms of probability? It cannot literally mean to double the probability value (e.g. $50\%$ becomes $100\%$, $75\%$ becomes $150\%$, etc.). Rather, it is the odds that are doubling: from 2:1 odds to 4:1 odds, to 8:1 odds, etc. The model that best represents this change of odds described just now is precisely the logistic model with the sigmoid function.

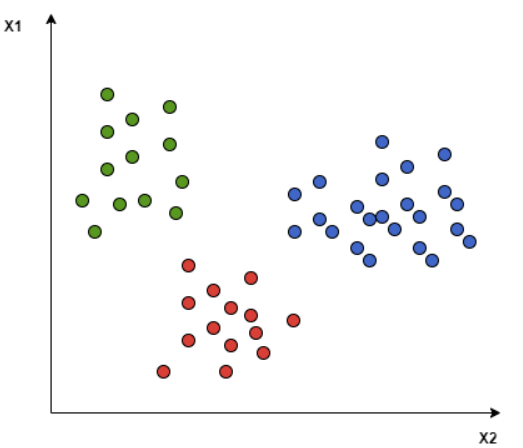

SoftMax Regression

In softmax regression, we are interested in a non-binary classification of input values with $d$ parameters, so let $y \in \{1, 2, 3, \ldots, k\}$ and let us try to find a hypothesis function $h: \mathcal{X} = \mathbb{R}^d \longrightarrow \mathcal{Y} = \{1, 2, \ldots, k\}$. It is natural to choose the multinomial family of distributions to model the conditional distribution of $y$ given $x$. Therefore, we can find that

\begin{align*}

h_\theta (x) & = \mathbb{E} \big( T(y) \,|\,x; \theta \big) \\

& = \mathbb{E} \Bigg( \begin{pmatrix} 1\{y=1\} \\ 1\{y=2\} \\ \vdots \\ 1\{y=k-1\} \end{pmatrix} \Bigg| x; \theta \Bigg) = \begin{pmatrix} \phi_1 \\ \phi_2 \\ \vdots \\ \phi_{k-1} \end{pmatrix}

\end{align*}

We must take this vector of $\phi_i$s and convert it into a form with $\eta_i$s. This is precisely what the link function is for (again, as a reminder, the link function gives the expectation of the conditional, written in parameters (e.g. $\phi$) of the distribution, as a function of the natural parameter $\eta$). We have found out that the multinomial distribution has

\[\eta = \begin{pmatrix} \eta_1 \\ \eta_2 \\ \vdots \\ \eta_{k-1} \end{pmatrix} = \begin{pmatrix} \log(\phi_1/\phi_k) \\ \log(\phi_2/\phi_k) \\ \vdots \\ \log(\phi_{k-1}/\log(\phi_k) \end{pmatrix} \implies \eta_i = \log \frac{\phi_i}{\phi_k} \text{ for } i = 1, 2, \ldots, k\]

Solving for $\phi_i$ and summing over all $i$, we get

\begin{align*}

\phi_i = \phi_k e^{\eta_i} & \implies 1 = \sum_{i=1}^k \phi_i = \phi_k \sum_{i=1}^k e^{\eta_i} \\

& \implies \phi_k = \frac{1}{\sum_{i=1}^k e^{\eta_i}} \\

& \implies \phi_i = \frac{e^{\eta_i}}{\sum_{i=1}^k e^{\eta_i}}

\end{align*}

So, we can see that our equation of best fit is

\[h_\theta (x) = \mathbb{E}\big( T(y) \,|\,x; \theta\big) = \begin{pmatrix} \phi_1 \\ \phi_2 \\ \vdots \\ \phi_{k-1} \end{pmatrix}

= \begin{pmatrix} \frac{\exp(\eta_1)}{\sum_{i=1}^k \exp(\eta_i)} \\ \frac{\exp(\eta_2)}{\sum_{i=1}^k \exp(\eta_i)} \\ \vdots \\ \frac{\exp(\eta_{k-1})}{\sum_{i=1}^k \exp(\eta_i)} \\ \end{pmatrix}

= \begin{pmatrix} \frac{\exp(\theta_1^T x)}{\sum_{i=1}^k \exp(\theta_i^T x)} \\ \frac{\exp(\theta_2^T x)}{\sum_{i=1}^k \exp(\theta_i^T x)} \\ \vdots \\ \frac{\exp(\theta_{k-1}^T x)}{\sum_{i=1}^k \exp(\theta_i^T x)} \\ \end{pmatrix} \]

Therefore, our hypothesis function above will output the estimated probability that $p(y = i\,|\,x; \theta)$, for every value of $i = 1, 2, \ldots, k$. Even though $h_\theta (x)$ as defined above is only $k-1$ dimensional, clearly $p(y = k\,|\,x; \theta)$ can be obtained as $1 - \sum_{i=1}^{k-1} \phi_i$).

Parameter fitting to get the optimal value of $\theta$ is easy. If we have a training set of $n$ examples $\{(x^{(i)}, y^{(i)}); i = 1, \ldots, n\}$ and would like to learn the parameters $\theta_i$ of this model, we would begin by writing down the log-likelihood \begin{align*} l(\theta) & = \sum_{i=1}^n \log p(y^{(i)}\,|\, x^{(i)}; \theta) \\ & = \sum_{i=1}^n \log \prod_{l=1}^k \Bigg( \frac{\exp (\theta_l^T x^{(i)})}{\sum_{j=1}^k \exp(\theta_j^T x^{(i)})}\Bigg)^{1\{y^{(i)} = 1\}} \end{align*} Assuming smoothness when necessary, we can use gradient ascent or some other numerical method to maximize $l$ in terms of $\theta$.

Poisson Regression

Poisson regression models are best used when modeling events where the dependent variable is a count, for example the instance of events such as the arrival of a telephone call or the number of times an event occurs during a given timeframe. We model the conditional distribution $y\,|\,x \sim \text{Poisson}(\lambda)$, and so we get

\begin{align*}

h_\theta(x) & = \mathbb{E} \big(y\,|\,x; \, \theta) \\

& = \lambda \\

& = e^{\eta} \\

& = e^{\theta^T x}

\end{align*}

Let's look at the intuition. Suppose a linear prediction model learns from some data that a 10 degree temperatre decrease would lead to 1,000 fewer people visiting the beach. This would not be a good model, since if a beach regularly has an attendance of 50 people, you would predict an impossible attendance value of -950 after a 10 degree temperature decrease. Logically, a more realistic model would instead predict a constant rate of increased beach attendance (e.g. an increase in 10 degrees leads to a doubling in beach attendance, and a drop in 10 degrees leads to a halving in attendance). (Question: Still not sure on why this conditional distribution assumption exactly leads to an exponential expectation function)

Generative Learning Algorithms: Gaussian Discriminant Analysis

[Hide]

There are two types of supervised learning algorithms used for classification in machine learning:

Comparing the applicability of GDA with logistic regression...

Comparing the applicability of GDA with logistic regression...

- Discriminative Learning Algorithms, which try to find a decision boundary between different classes during the learning process. algorithms that try to learn $\mathbb{P}(y\,|\,x)$ directly (e.g. logistic regression) or algorithms that try to learn mappings directly from the space of inputs $\mathcal{X}$ to the labels $\{0, 1\}$ (e.g. perceptron).

- Generative Learning Algorithms, which attempt to capture the distribution of each class separately instead of finding a decision boundary. Say, for a binary classification model, $\mathbb{P}(x\,|\,y=0)$ models the distribution of features of samples in bin $0$ in $\mathcal{X}$, and $\mathbb{P}(x\,|\,y=1)$ models the distribution of features of samples in bin $1$ in $\mathcal{X}$. In general, rather than trying to learn $\mathbb{P}(y\,|\,x)$, it learns $\mathbb{P}(x\,|\,y)$. After modeling $\mathbb{P}(x\,|\,y)$ and $\mathbb{P}(y)$, our algorithm can then use Bayes rule to derive the posterior distribution on $y$ given $x$: \[\mathbb{P}(y\,|\,x) = \frac{\mathbb{P}(x\,|\,y) \; \mathbb{P}(y)}{\mathbb{P}(x)}\]

Multivariate Gaussian Distributions

The multivariate Gaussian distribution in $d$-dimensions is parameterized by a mean vector $\mu \in \mathbb{R}^d$ and a covariance matrix $\Sigma \in \text{Mat}(d \times d, \mathbb{R})$ that is symmetric and positive semi-definite (note that by the spectral theorem, this implies that $\Sigma$ has $d$ orthogonal eigenvectors with all positive eigenvalues). We can write a random variable that has a $d$-dimensional Gaussian distribution as

\[X \sim \mathcal{N}_d (\mu, \Sigma)\]

with density

\[p(x;\;\mu, \Sigma) = \frac{1}{(2\pi)^{d/2} |\Sigma|^{1/2}} \exp\bigg( -\frac{1}{2} (x - \mu)^T \Sigma^{-1} (x - \mu)\bigg)\]

where $|\Sigma|$ denotes the determinant of the matrix $\Sigma$ (note that $\Sigma$ is guaranteed to be nonsingular). It is clear that $\mathbb{E}(X) = \mu$, and the covariance of this vector-valued random variable, defined

\[\text{Cov}(X) \equiv \mathbb{E} \big( (X - \mathbb{E}(X))(X - \mathbb{E}(x))^T\big) = \mathbb{E}\big( XX^T\big) - \big(\mathbb{E}(X)\big)\big(E(X)\big)^T\]

gives the square matrix $\Sigma$. Geometrically, we can interpret the covariance matrix as determining the elliptical shape of the Gaussian, where the (orthonormal) eigenvectors, scaled by their respective eigenvalues, determine the axes of the ellipse (not quite sure: check math on this). For example, in a 2-dimensional Gaussian, let us have $\mu = 0$ for all three examples, but let $\Sigma$ be different with the following eigendecompositions (with normalized eigenvectors).

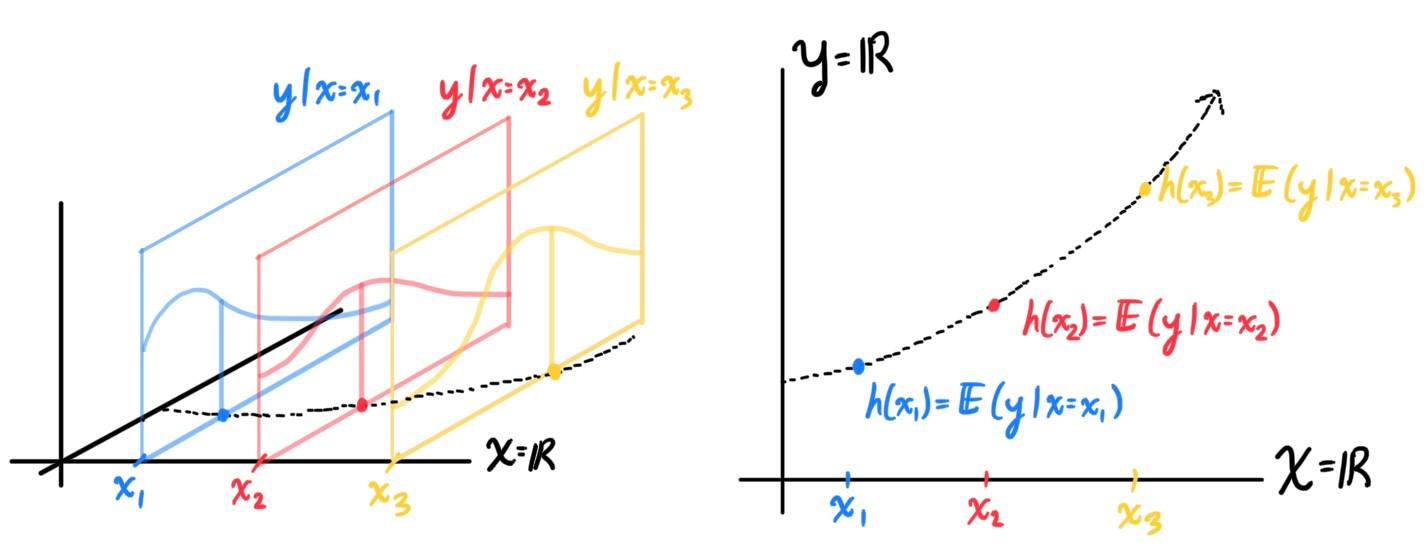

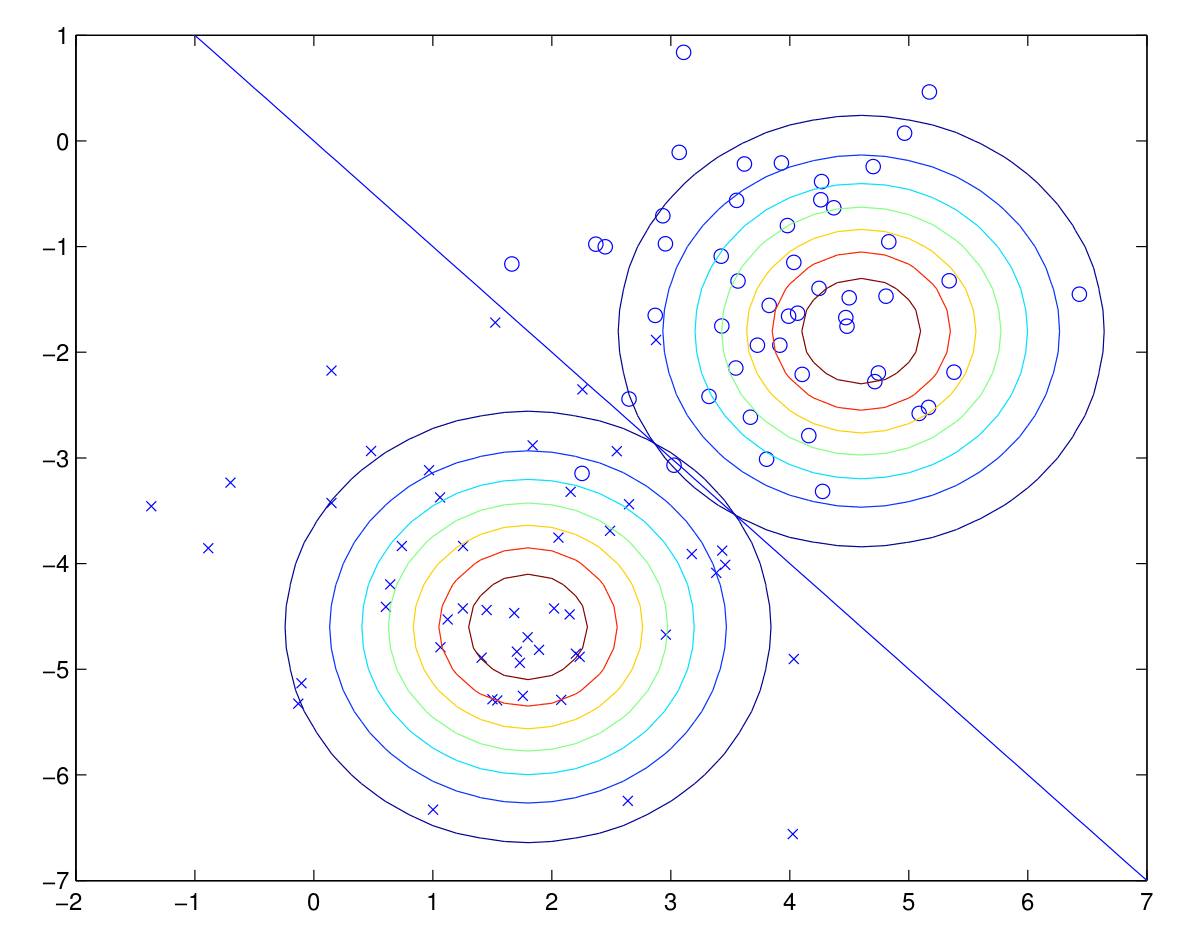

Gaussian Discriminant Analysis

GDA assumes that $\mathbb{P}(x\,|y)$ is distributed according to a multivariate Gaussian distribution. Let us assume that the input space is $d$-dimensional and this is a binary classification problem. We set

\begin{align*}

y & \sim \text{Bernoulli}(\phi) \\

x\,|\,y = 0 & \sim \mathcal{N}_d (\mu_0, \Sigma) \\

x\,|\,y = 1 & \sim \mathcal{N}_d (\mu_1, \Sigma)

\end{align*}

This method is usually applied using only one covariance matrix $\Sigma$. The distributions are

\begin{align*}

p(y) & = \phi^y (1 - \phi)^{1-y} \\

p(x\,|\,y = 0) & = \frac{1}{(2\pi)^{d/2} |\Sigma|^{1/2}} \exp \bigg(-\frac{1}{2} (x - \mu_0)^T \Sigma^{-1} (x - \mu_0)\bigg) \\

p(x\,|\,y= 1) & = \frac{1}{(2\pi)^{d/2} |\Sigma|^{1/2}} \exp \bigg(-\frac{1}{2} (x - \mu_1)^T \Sigma^{-1} (x - \mu_1)\bigg)

\end{align*}

Now, what we have to do is optimize the distribution parameters $\phi \in (0, 1) \mathbb{R}, \mu_0 \in \mathbb{R}^d, \mu_1 \in \mathbb{R}^d, \Sigma \in \text{Mat}(d \times d, \mathbb{R}) \simeq \mathbb{R}^{d \times d}$ so that we get the best-fit model. Assuming that each sample has been picked independently, this is equal to maximizing

\[L(\phi, \mu_0, \mu_1, \Sigma) = \prod_{i=1}^n \mathbb{P}\big( x^{(i)}, y^{(i)}\,;\, \phi, \mu_0, \mu_1, \Sigma\big)\]

which is really just the probability that we get precisely all these training samples $(x^{(i)}, y^{(i)})$ given the 4 parameters. This can be done by optimizing its log-likelihood, which is given by

\begin{align*}

l(\phi, \mu_0, \mu_1, \Sigma) & = \log \prod_{i=1}^n \mathbb{P}(x^{(i)}, y^{(i)}; \phi, \mu_0, \mu_1, \Sigma) \\

& = \log \prod_{i=1}^n \mathbb{P}( x^{(i)} \,|\, y^{(i)}; \mu_0, \mu_1, \Sigma) \, \mathbb{P}(y^{(i)}; \phi) \\

& = \sum_{i=1}^n \log \bigg( \mathbb{P}( x^{(i)} \,|\, y^{(i)}; \mu_0, \mu_1, \Sigma) \, \mathbb{P}(y^{(i)}; \phi) \bigg)

\end{align*}

and therefore gives the maximum likelihood estimate to be

\begin{align*}

\phi & = \frac{1}{n} \sum_{i=1}^n 1\{y^{(i)} = 1\} \\

\mu_0 & = \frac{\sum_{i=1}^n 1\{y^{(i)} = 0\} x^{(i)}}{\sum_{i=1}^n 1\{y^{(i)} = 0 \}} \\

\mu_1 & = \frac{\sum_{i=1}^n 1\{y^{(i)} = 1\} x^{(i)}}{\sum_{i=1}^n 1\{y^{(i)} = 1 \}} \\

\Sigma & = \frac{1}{n} \sum_{i=1}^n (x^{(i)} - \mu_{y^{(i)}}) (x^{(i)} - \mu_{Y^{(i)}})^T

\end{align*}

A visual of the algorithm is below, with contours of the two Gaussian distributions, along with the straight line giving the decision boundary at which $\mathbb{P}(y=1\,|\,x) = 0.5$.

Comparing the applicability of GDA with logistic regression...

Comparing the applicability of GDA with logistic regression...

- GDA makes the stronger assumption about the data being distributed normally. If these assumptions are (at least approximately) correct, GDA performs much better and is more data efficient (i.e. requires less training data to learn well).

- Logistic regression makes significantly weaker assumptions, but it is more robust and less sensitive to incorrect modeling asssumptions.

Naive Bayes & Laplace Smoothing

[Hide]

In GDA, we worked in a sample space $\mathcal{X} = \mathbb{R}^d$, where the feature vectors $x$ were continuous and real-valued. In the case where the sample space is discrete-valued (e.g. $\mathcal{X} = \{0, 1\}^n$),

Kernel Methods

[Hide]Polynomial LMS Regression with Feature Mappings

Let us recall that given $n$ training samples $(x^{(i)}, y^{(i)})$, with the inputs having $d$ scalar parameters, encoded in the form of vector

\[x^{(i)} = \begin{pmatrix} x^{(i)}_1 \\ x^{(i)}_2 \\ \vdots \\ x^{(i)}_d \end{pmatrix}\]

say that we want to make a degree-$p$ polynomial of best fit for the data. The following feature map $\phi: \mathbb{R}^d \longrightarrow \mathbb{R}^{\tilde{p}}$ maps these $d$ attributes to a vector containing all possible monomials with degree $\leq p$.

\[\phi: \begin{pmatrix} x_1^{(i)} \\ x_2^{(i)} \\ \vdots \\ x_d^{(i)} \end{pmatrix} \mapsto \begin{pmatrix}

1 \\ x_1^{(i)} \\ x_2^{(i)} \\ \ldots \\ (x_{d-1}^{(i)})^{p-1} x_d \\ (x_d^{(i)})^p

\end{pmatrix}\]

where the dimension of the vector $\phi(x)$ is some $\tilde{p}$ that is on the order of $d^p$. We can optimize $\theta$ to solve the least-squares equation

\[X \theta = y \iff \begin{pmatrix}

— & \phi(x^{(1)}) & — \\

— & \phi(x^{(2)}) & — \\

\vdots & \vdots & \vdots \\

— & \phi(x^{(n)}) & —

\end{pmatrix} \begin{pmatrix} \theta_1 \\ \theta_2 \\ \vdots \\ \theta_{\tilde{p}} \end{pmatrix} = \begin{pmatrix} y^{(1)} \\ y^{(2)} \\ y^{(3)} \\ \vdots \\ y^{(n)} \end{pmatrix}\]

Recall that the (batch) gradient descent update of the LMS linear fit, where we must fit the model $h_\theta = \theta^T x$ in terms of the $d$ input attributes $x$, is

\begin{align*}

\theta & = \theta + \alpha \sum_{i=1}^n \big( y^{(i)} - h_\theta(x^{(i)})\big) \, x^{(i)} \\

& = \theta + \alpha \sum_{i=1}^n \big(y^{(i)} - \theta^T x^{(i)} \big)\, x^{(i)}

\end{align*}

Since polynomial regression is really just a linear fit in terms of the $\tilde{p}$ features variables $\phi(x)$ (not the $d$ input attributes $x$), we just need to simply fit the function $\theta^T \phi(x)$ (where $\theta \in \mathbb{R}^{\tilde{p}}$). This gives us the update rule

\begin{align*}

\theta & = \theta + \alpha \sum_{i=1}^n \big( y^{(i)} - h_\theta(x^{(i)})\big) \, x^{(i)} \\

& = \theta + \alpha \sum_{i=1}^n \big(y^{(i)} - \theta^T \phi(x^{(i)}) \big)\, x^{(i)}

\end{align*}

However, the explosion in dimensionality of $\phi(x)$ and of $\theta$ (as $p$ and $d$ gets large) renders this algorithm computationally inefficient. Updating every entry of $\theta$ after each update of batch GD (or even stochastic GD) can be inefficient.

Feature Maps

Let us clarify some properties of feature maps here. Note that the entire reason we construct a feature map is to account for a higher-order relationship between the input attributes $x_1, x_2, \ldots, x_d \in \mathbb{R}$ and the outcome $y \in \mathbb{R}$. For example, for the 2 input attributes encoded in vector $x = \big(x_1 \;\; x_2\big)$, to construct the full feature map that accounts for all monomials of $x_1$ and $x_2$ of degree $\leq p = 2$, we can see that

- There are $2^0 = 1$ terms that have degree $0$: $1$

- There are $2^1 = 2$ terms that have degree $1$: $x_1, x_2$

- There are $2^2 = 4$ terms that have degree $2$: $x_1^2, x_1 x_2, x_2 x_1, x_2^2$

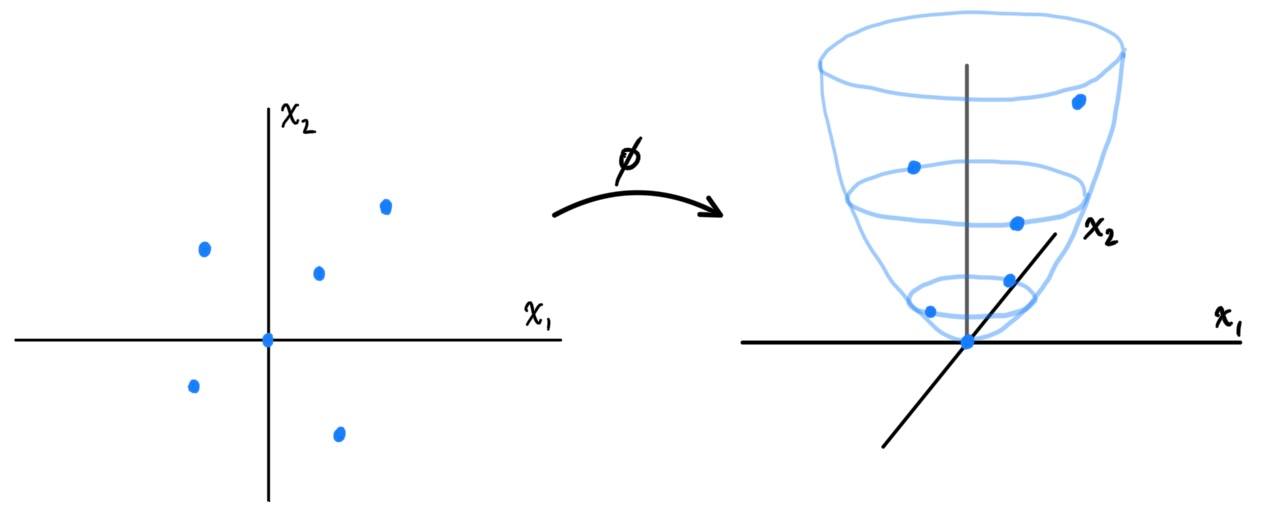

It turns out we have some flexibility on choosing the feature map $\phi$. Rather than accounting for all combinations of the $x_i$'s, we can decide that the "relevant" variables are just $x_1, x_2, x_1^2, x_1 x_2$ and define $\phi: \mathbb{R}^2 \longrightarrow \mathbb{R}^4$ \[\phi\begin{pmatrix} x_1 \\ x_2 \end{pmatrix} = \begin{pmatrix} x_1 \\ x_2 \\ x_1^2 \\ x_1 x_2 \end{pmatrix} \] or we can even decide that $x_1 x_2, x_2 x_1$ are completely irrelevant, and it is really just $x_1, x_2$, and $x_1^2 + x_2^2$ that are the relevant feature inputs. \[\phi\begin{pmatrix} x_1 \\ x_2 \end{pmatrix} = \begin{pmatrix} x_1 \\ x_2 \\ x_1^2 + x_2^2 \end{pmatrix}\] which can be visualized as such below.

Generalizing this to $d$ input attributes in $x = \big( x_1 \ldots x_d \big)$, to construct the full feature map that accounts for all monomials of $x_1, \ldots, x_d$, we can see that

Generalizing this to $d$ input attributes in $x = \big( x_1 \ldots x_d \big)$, to construct the full feature map that accounts for all monomials of $x_1, \ldots, x_d$, we can see that

- There are $d^0 = 1$ terms that have degree $0$: 1

- There are $d^1 = d$ terms that have degree $1$: $x_1, x_2, \ldots, x_d$

- There are $d^2$ terms that have degree $2$: $x_1 x_1, x_1 x_2, \ldots, x_d x_{d-1}, x_d x_d$

- ...

- There are $d^p$ terms that have degree $p$: $x_1^p x_1^{p-1} x_2, \ldots, x_{d}^{p-1} x_{d-1}, x_d^{p}$

LMS with Kernel Trick

For simplicity, we first initialize $\theta = 0$ (the $\tilde{p}$-vector of all entries $0$), and we look at the update rule:

\[\theta = \theta + \alpha \sum_{i=1}^n \big(y^{(i)} - \theta^T \phi(x^{(i)}) \big)\, x^{(i)}\]

We claim that $\theta$ is always, after every update, is a linear combination of $\phi(x^{(1)}), \ldots, \phi(x^{(n)})$, since by induction we have $\theta = 0 = \sum_{i=1}^0 0 \cdot \phi(x^{(i)})$ at initialization and assuming $\theta$ can be represented as

\[\theta = \sum_{i=1}^n \beta_i \phi(x^{(i)})\]

for some $\beta_1, \beta_2, \ldots, \beta_n \in \mathbb{R}$, we can see that the updated term

\begin{align*}

\theta & = \theta + \alpha \sum_{i=1}^n \big(y^{(i)} - \theta^T \phi(x^{(i)})\big) \,\phi(x^{(i)}) \\

& = \sum_{i=1}^n \beta_i \phi(x^{(i)}) + \alpha \sum_{i=1}^n \big(y^{(i)} - \theta^T \phi(x^{(i)})\big)\,\phi(x^{(i)}) \\

& = \sum_{i=1}^n \Big( \beta_i + \alpha \big(y^{(i)} - \theta^T \phi(x^{(i)})\big) \Big) \, \phi(x^{(i)})

\end{align*}

is also a linear combination of the $\phi(x^{(i)})$. This immediately implies that this update function maps the subspace $V = \text{span}\big(\phi(x^{(1)}), \ldots, \phi(x^{(n)}) \big)$ onto itself (but it is not linear). That is, $\theta \in V \implies$ the updated $\theta$ will also be in $V$. Furthermore, we see that the new $\beta_i$s are updated accordingly, and substituting $\theta = \sum_{j=1}^n \beta_j \phi(x^{(j)})$ gives

\begin{align*}

\beta_i & = \beta_i + \alpha \bigg( y^{(i)} - \sum_{j=1}^n \beta_j \big(\phi(x^{(j)})\big)^T\; \phi(x^{(i)}) \bigg) \\

& = \beta_i + \alpha \bigg( y^{(i)} - \sum_{j=1}^n \beta_j \big\langle\phi(x^{(j)}), \;\phi(x^{(i)})\big\rangle \bigg)

\end{align*}

where $\langle\cdot, \; \cdot \rangle$ represents the Euclidean inner product of $\mathbb{R}^d$. This inner product turns out to be very important, so we will define the Kernel function to be a map $F: \mathcal{X} \times \mathcal{X} \longrightarrow \mathbb{R}$ satisfying

\[K(x, z) \equiv \big\langle \phi(x), \phi(z) \big\rangle\]

for some feature map $\phi$. In this context, we would like to calculate the Kernels of all pairs of the $n$ input features $K\big( \phi(x^{(i)}), \phi(x^{(j)}) \big)$ for all $i, j = 1, \ldots, n$, so for convenience, we can store their values in the $n \times n$ Kernel matrix $\mathbf{K}$, defined

\[\mathbf{K_{ij}} \equiv K\big( x^{(i)}, x^{(j)}\big) \equiv \big\langle \phi(x^{(i)}), \phi(x^{(j)}) \big\rangle\]

(Note that this is analogous to the definition of a metric tensor in differential geometry.) Therefore, we can rewrite the update rule for GD to be

\[\beta_i = \beta_i + \alpha \Bigg( y^{(i)} - \sum_{j=1}^n \beta_j \, K(x^{(i)}, x^{(j)}) \Bigg)\]

or in vector notation,

\[\beta = \beta + \alpha (y - \mathbf{K} \beta)\]

We may have rewritten this in the form of a Kernel, but there is still the computational problem in calculating $K$, which requires us to deal with caluclating the high-dimensional $\phi$. Normally, to calculate each $K \big( x^{(i)}, x^{(j)} \big)$, we would have to find the coordinate representation of $\phi(x^{(i)}), \phi(x^{(j)})$ in the extremely high-dimensional vector space, and then sum the products of all of its elements. However, it is possible to directly calculate this inner product with this mathematical trick: For any $x, z \in \mathbb{R}^d$ and feature map $\phi$ mapping $x, z$ to vectors containing all the monomials of $x, z$ of degree $\leq p$, we have

\begin{align*}

\big\langle \phi(x), \phi(z) \big\rangle & = 1 + \sum_{i=1}^d x_i z_i + \sum_{i, j \in \{1, \ldots, d\}} x_i x_j z_i z_j + \ldots + \sum_{i_1, \ldots, i_p \in \{1, \ldots, d\}} \bigg(\prod_{\alpha=1}^p x_{i_\alpha} \bigg)\bigg(\prod_{\gamma = 1}^p z_{i_\gamma} \bigg) \\

& = 1 + \sum_{i=1}^d x_i z_i + \Bigg( \sum_{i=1}^d x_i z_i \Bigg)^2 + \ldots + \Bigg( \sum_{i=1}^d x_i z_i \Bigg)^p \\

& = 1 + \big\langle x, z \big\rangle + \big\langle x, z\big\rangle^2 + \ldots + \big\langle x, z \big\rangle^p

\end{align*}

Therefore, this is an extremely simple computation compared to what we did before. By simply calculating

\[K\big(x^{(i)}, x^{(j)}\big) = \sum_{k=0}^p \big\langle x^{(i)}, x^{(j))} \big\rangle^p \iff \mathbf{K} = \begin{pmatrix}

\sum_{k=0}^p \big\langle x^{(1)}, x^{(1))} \big\rangle^p & \ldots & \sum_{k=0}^p \big\langle x^{(1)}, x^{(n))} \big\rangle^p \\

\vdots & \ddots & \vdots \\

\sum_{k=0}^p \big\langle x^{(n)}, x^{(1))} \big\rangle^p & \ldots & \sum_{k=0}^p \big\langle x^{(n)}, x^{(n))} \big\rangle^p

\end{pmatrix}\]

and running the recursive algorithm

\[\beta = \beta + \alpha \big(y - \mathbf{K} \beta \big)\]

we can update $\beta$ efficiently with $O(n)$ time per update. Since $\beta$ are just the coordinates of the $\tilde{p}$-dimensional $\theta$ with respect to basis $\phi(x^{(i)})$, we can do a change of basis to find $\theta$.

\[\theta = \beta_1 \phi(x^{(1)}) + \beta_2 \phi(x^{(n)}) + \ldots + \beta_n \phi(x^{(n)}) \in \mathbb{R}^{\tilde{p}}\]

Therefore, the polynomial best-fit function $\theta^T \phi(x)$ can be written as

\[\theta^T \phi(x) = \bigg(\sum_{i=1}^n \beta_i \big(\phi(x^{(i)})\big)^T \bigg) \; \phi(x) = \sum_{i=1}^n \beta_i \, K(x^{(i)}, x)\]

Therefore, all we need to know about feature map $\phi(\cdot)$ is encapsulated in the corresponding kernel function $K$.

Constructing Kernels: Necessary & Sufficient Conditions for Valid Kernels

We can see that the best fit function

\[\theta^T \phi(x) = \sum_{i=1}^n \beta_i K\big( x^{(i)}, x \big)\]

is completely dependent on how the kernel function $K$ is defined. Everything we need to know about the feature map $\phi(\cdot)$ is encapsulated in the corresponding Kernel function $K(\cdot, \cdot)$. It is obvious that the existence of a feature map $\phi$ implies that $K$ is well-defined (because, well, $K$ is defined using the feature map).

\[\exists \phi \implies \exists K(\cdot, \cdot) \equiv \big\langle \phi(\cdot), \phi(\cdot) \big\rangle\]

But what about the other way around? What kinds of kernel functions $K(\cdot, \cdot)$ correspond to some feature map $\phi$? Given any kernel function $K$, can we determine the existence (not necessarily need to explicitly write it down) of some feature mapping $\phi$ such that $K(x, z) = \langle \phi(x), \phi(z) \rangle$ for all $x, z$?

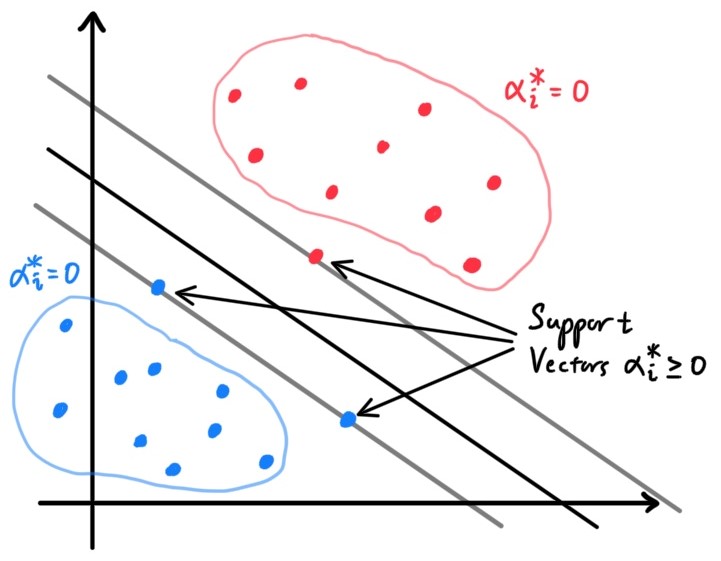

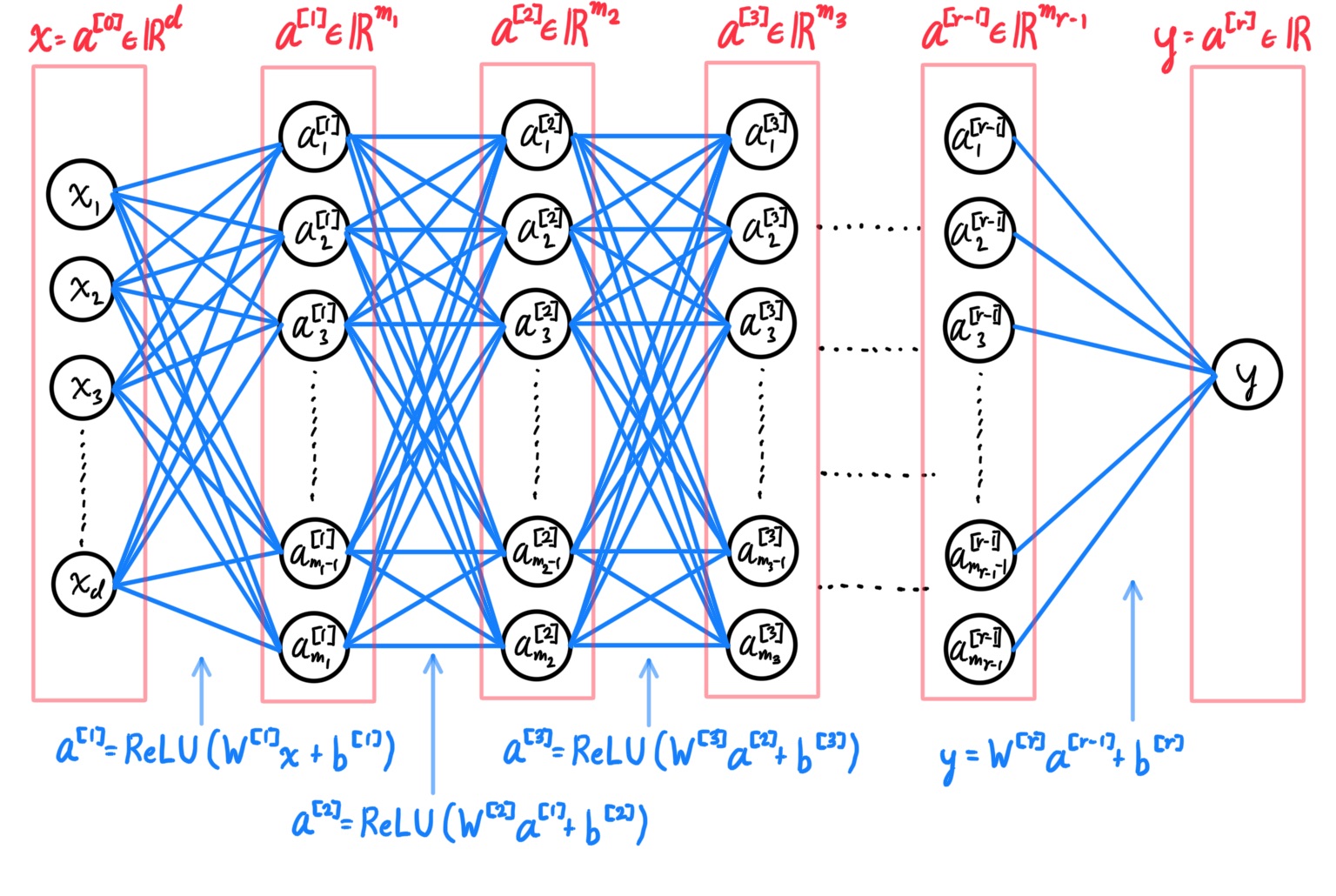

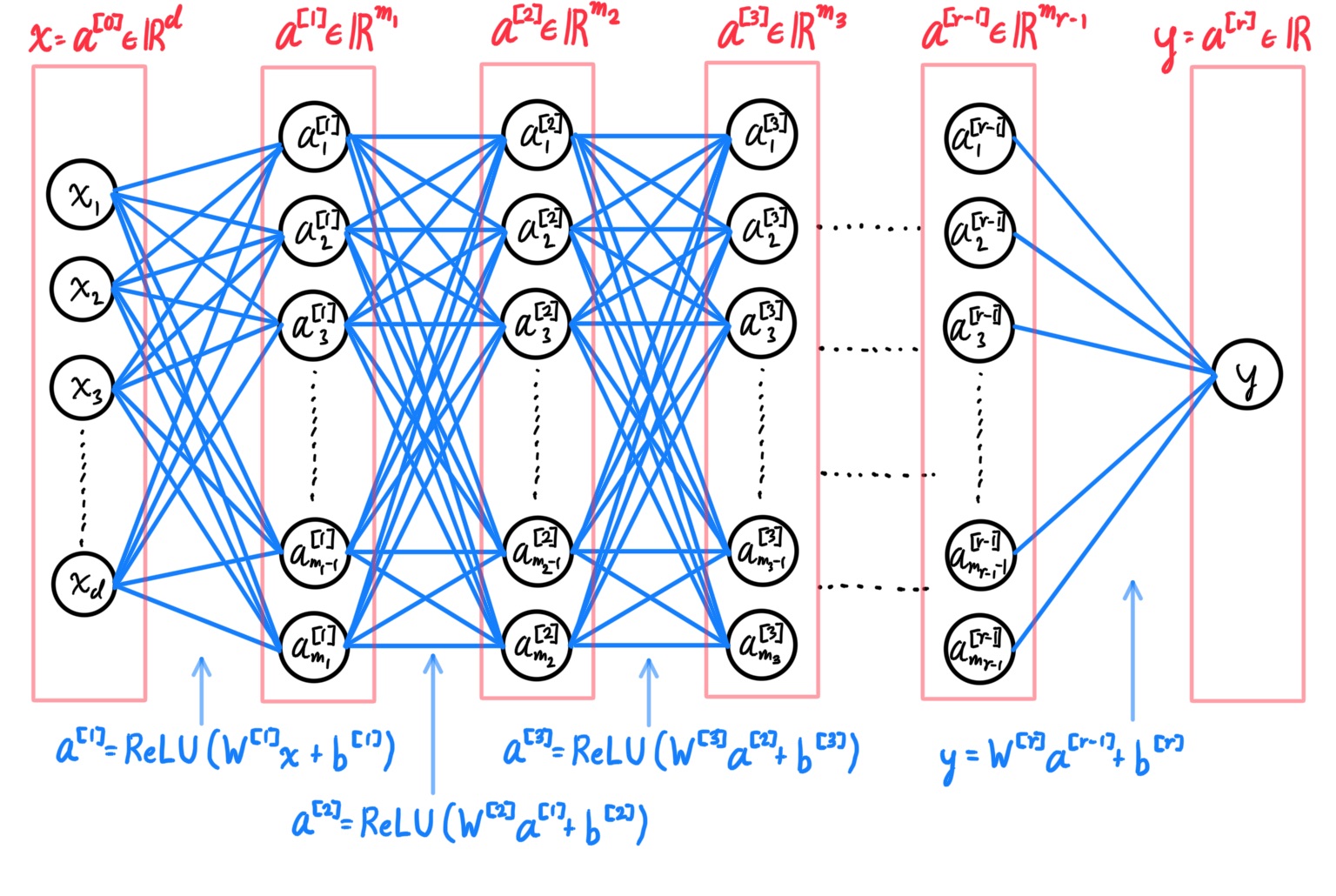

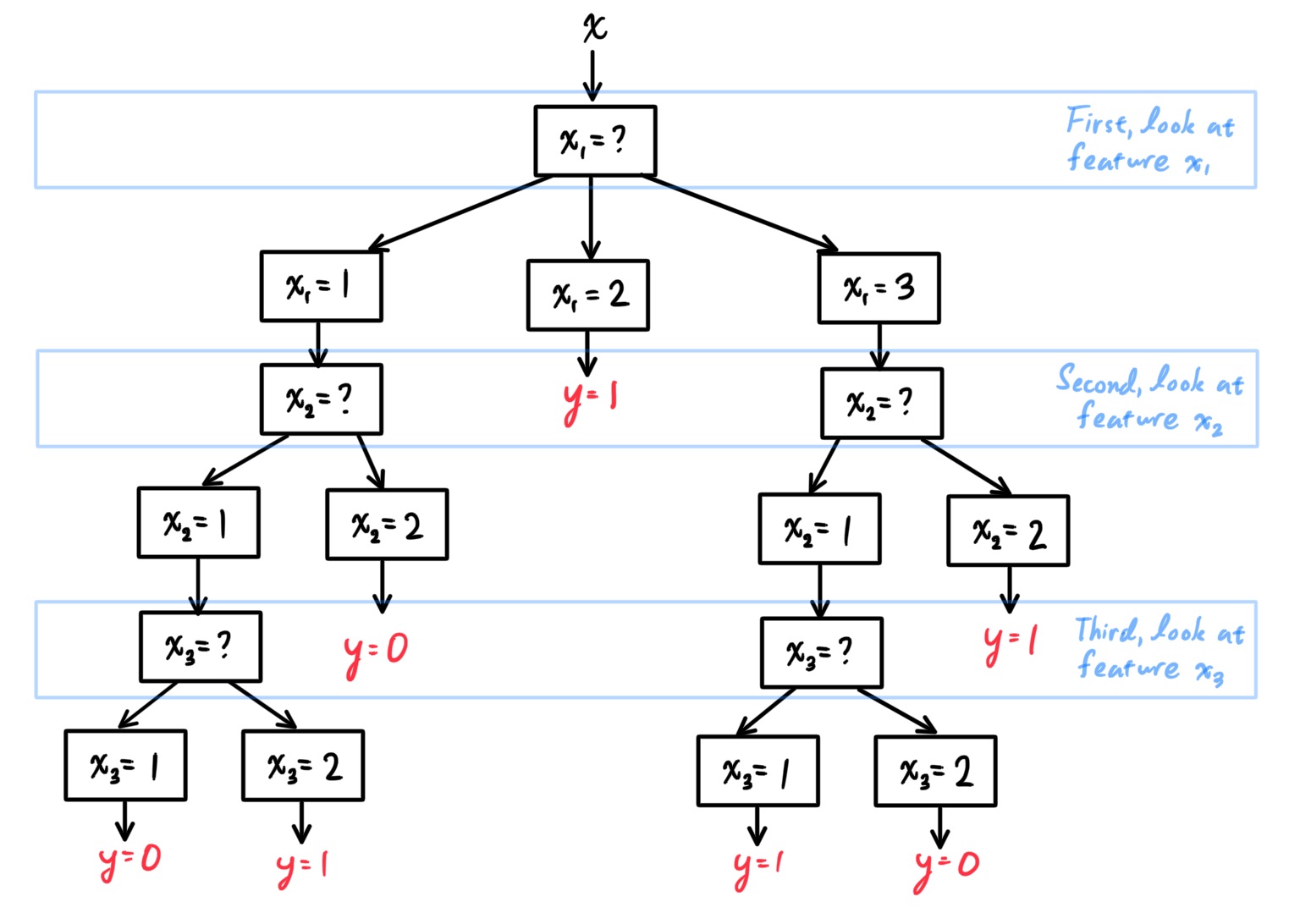

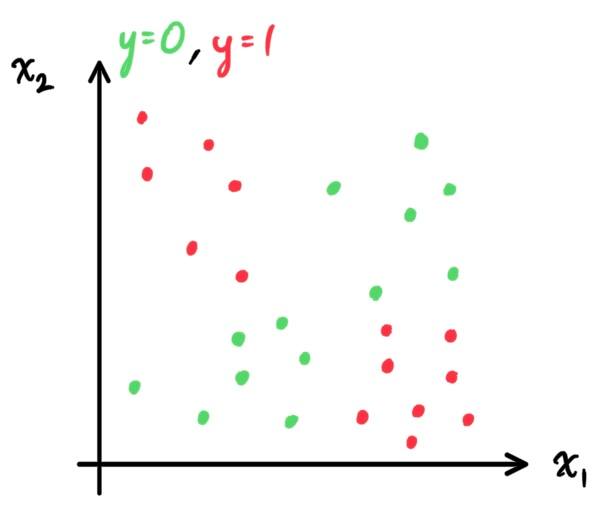

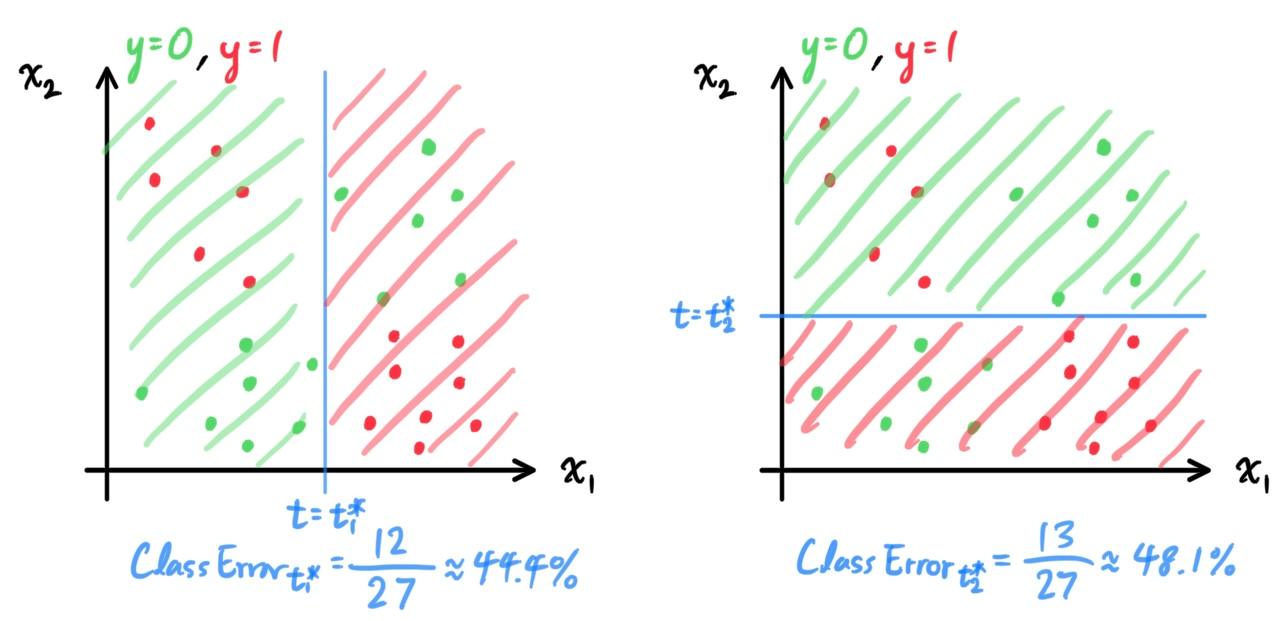

For example, for $d=3$, the following definition of $K$ gives $\tilde{p} = 3^2 = 9$ and \[K(x, z) \equiv \langle x, z \rangle^2 = \sum_{i, j = 1}^d (x_i x_j) (z_i z_j) \implies \phi(x) = \begin{pmatrix} x_1^2 \\ x_1 x_2 \\ x_1 x_3 \\ x_2 x_1 \\ x_2^2 \\ x_2 x_3 \\ x_3 x_1 \\ x_3 x_2 \\ x_3^2 \end{pmatrix}\] For another example, with $d=3$ an, the following definition of $K$ gives $\tilde{p} = 3^2 + 3 + 1 = 13$ and gives \[K(x, z) \equiv \big( \langle x, z\rangle + c \big)^2 = \sum_{i, j = 1}^d (x_i x_j)(z_i z_j) + \sum_{i = 1}^d (\sqrt{2c} x_i) (\sqrt{2c} z_i) + c^2\] which gives the 13-vector (written as a row to save space) \[\phi(x) \equiv \begin{pmatrix} x_1^2 & x_1 x_2 & x_1 x_3 & x_2 x_1 & x_2^2 & x_2 x_3 & x_3 x_1 & x_3 x_2 & x_3^2 & \sqrt{2c} x_1 & \sqrt{2c} x_2 & \sqrt{2c} x_3 & c \end{pmatrix}^T\] with the parameter $c$ controlling the relative weighting between the first order and second order terms.